Application Security Maturity Model

This is a starter page. I just wanted to get some of the material we had put together on this issue out in a forum where folks could start working on it. Feel free to stomp all over my initial content (dan AT denimgroup.com). Join the discussion on the mailing list.

Goals

- Create a maturity model for use in evaluating organizations' application security practices.

- Use other maturity models such as CMMi as guidelines, but focus on creating standards immediately useful to the application security community.

Overview

Organizations and teams vary in the practices they use to promote security in the applications they develop and deploy. There are a number of stakeholders who have a vested interest in understanding the level of maturity of these practices:

- Internal security and audit groups need to know the degree to which development teams are upholding and promoting security policies.

- Purchasing organizations need to understand the level of security to expect from software systems they procure from vendors.

The Application Security Maturity Model (ASMM) is intended to provide a structured model against which development organizations can be evaluated so that these stakeholders can have an appropriate understanding of the security state of systems being produced.

Current Material

The SEI CMMi has five levels:

- Initial

- Managed

- Defined

- Quantitatively Managed

- Optimizing

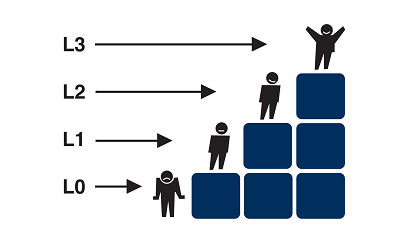

The work we have done thus far on an ASMM has resulted in four levels. (We did not intentionally set out to make our ASMM a direct analog of the SEI CMMi. If folks want to bring ASMM more directly in line with CMMi, this could be modified. Likewise, if it makes more sense to have 3 or 5 or 22 levels, that can be changed as well. These levels are simply based on the useful distinctions we have made thus far between the practices adopted by the organizations we work with and their results.) Our levels include:

- Nothing

- Ad Hoc

- Controlled

- Optimizing (probably an inappropriate name at this point; must have had CMMi on the brain...)

We have been looking at a number of factors within an organization that indicate how seriously it takes application security and what resources it has decided to devote to these concerns. Some of these include:

- How well does the organization understand its application portfolio?

- How does the organization use automated tools?

- When are security concerns addressed in the process?

- Is the organization reactive or proactive?

- Who within the organization is responsible for application security?

- How many internal personnel have application security as a significant, declared part of their job responsibilities?

- How are developers and other participants trained in application security?

- Is the organization's approach to application security risk qualitative or quantitative?
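As a rough illustration, the factors above could be captured as a simple assessment record so that answers are collected consistently across organizations. This is only a sketch: the class name, the field names, and the `unanswered` helper are hypothetical and not part of the ASMM material.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class FactorAnswers:
    """One answer per factor question; all names here are hypothetical."""
    portfolio_understanding: Optional[str] = None    # how well the portfolio is understood
    automated_tool_use: Optional[str] = None         # how automated tools are used
    when_security_addressed: Optional[str] = None    # when in the process security comes up
    reactive_or_proactive: Optional[str] = None      # "reactive" or "proactive"
    responsible_party: Optional[str] = None          # who owns application security
    dedicated_personnel_count: Optional[int] = None  # staff with a declared appsec role
    training_approach: Optional[str] = None          # how developers and others are trained
    risk_approach: Optional[str] = None              # "qualitative" or "quantitative"


def unanswered(answers: FactorAnswers) -> List[str]:
    """Return the names of factors that have not been answered yet."""
    return [f.name for f in fields(answers) if getattr(answers, f.name) is None]


# Example: a partially completed assessment (values are made up).
partial = FactorAnswers(
    automated_tool_use="black-box scans run by external consultants",
    dedicated_personnel_count=0,
)
print(unanswered(partial))
```

Note that a count of zero is treated as an answer, since "nobody has this as a declared responsibility" is itself useful information.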

A rough breakdown of the levels follows:

(L0) Nothing

- The application portfolio is largely unknown. Understanding the organization's application attack surface requires discussions with many people distributed throughout many parts of the organization

- Any audit or compliance check results in a massive "fire drill" effort

(L1) Ad Hoc

- A rough order of magnitude is known for the size of the application portfolio.

- There is some use of automated tools (typically black-box assessment scanning tools run by external consultants or security team personnel). These scans typically result in significant findings of serious defects such as XSS and SQL injection

- Any documented processes or practices are focused on "security features" rather than "secure features" and are only followed sporadically

(L2) Controlled

- The size of the application portfolio is mostly known

- High-value applications undergo regular, systematic reviews including some manual testing

- Processes and practices address "secure features" as well as "security features," rather than focusing solely on the latter, and are typically followed

- Developers receive some training in secure design and development techniques

(L3) Optimizing

- The application portfolio is known and documented and applications are categorized and ranked by their security significance

- The level of review for applications is based on their value and security significance

- High-value applications undergo regular, systematic reviews including manual testing

- Developers are trained in secure coding and design principles

- Security is discussed and addressed throughout the project development and product acquisition process
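The breakdown above could be encoded roughly as follows. This is a minimal sketch, not a scoring method defined by the ASMM: the level names come from the list above, but the lookup keys, the dimension names, and the "weakest link" rule (rating an organization at its lowest per-dimension level) are assumptions made purely for illustration.

```python
from enum import IntEnum
from typing import Dict


class Level(IntEnum):
    """The four ASMM levels as listed above (L0 through L3)."""
    NOTHING = 0
    AD_HOC = 1
    CONTROLLED = 2
    OPTIMIZING = 3


# Portfolio knowledge per level, paraphrased from the breakdown above.
# The dictionary keys are hypothetical labels, not official terms.
PORTFOLIO_KNOWLEDGE: Dict[str, Level] = {
    "largely unknown": Level.NOTHING,
    "rough order of magnitude": Level.AD_HOC,
    "mostly known": Level.CONTROLLED,
    "documented and ranked": Level.OPTIMIZING,
}


def overall_level(per_dimension: Dict[str, Level]) -> Level:
    """Assumed rule: the overall rating is the lowest level across dimensions."""
    if not per_dimension:
        raise ValueError("at least one dimension must be rated")
    return min(per_dimension.values())


# Example: portfolio is mostly known, but processes are followed only sporadically.
ratings = {
    "portfolio": PORTFOLIO_KNOWLEDGE["mostly known"],
    "process": Level.AD_HOC,
}
print(overall_level(ratings).name)
```

Taking the minimum is a deliberately conservative choice; an averaging or weighted scheme would be an equally plausible alternative once more data on the levels is available.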

Future Development

We have some more data on the characteristics of the different levels and on other practices, but I need to sanitize it before pushing it up. Everything I have uploaded is very early-stage, so please feel free to comment and modify as appropriate.

Project Contributors

Dan Cornell dan AT denimgroup.com put this initial page together. Feel free to jump in with edits and comments!