This site is the archived OWASP Foundation Wiki and is no longer accepting Account Requests.

To view the new OWASP Foundation website, please visit https://owasp.org

Review Webserver Metafiles for Information Leakage (OTG-INFO-003)

This article is part of the new OWASP Testing Guide v4.


Summary

This section describes how to test the robots.txt file for information leakage of the web application's directory or folder paths. Furthermore, the list of directories that spiders/robots/crawlers are asked to avoid can also be created as a dependency for OWASP-IG-009[1].

Test Objectives

1. Identify information leakage of the web application's directory or folder paths.

2. Create the list of directories that are to be avoided by spiders/robots/crawlers.

How to Test

Web spiders/robots/crawlers retrieve a web page and then recursively traverse hyperlinks to retrieve further web content. Their expected behavior is specified by the Robots Exclusion Protocol, in the form of the robots.txt file in the web root directory [1].
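The following Python sketch illustrates that behavior under stated assumptions: the hypothetical `SITE` dictionary stands in for HTTP responses from a hypothetical host `www.example.com`, and any path that begins with a Disallow prefix is skipped, as a compliant crawler would do. It is a teaching aid, not a production spider.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

BASE = "http://www.example.com"  # hypothetical host, used only to resolve links


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.links.extend(value for name, value in attrs if name == "href" and value)


def crawl(site: dict[str, str], start: str, disallow: list[str]) -> list[str]:
    """Breadth-first traversal of `site` (path -> HTML) that honors Disallow prefixes."""
    visited: list[str] = []
    queue = deque([start])
    seen = {start}
    while queue:
        path = queue.popleft()
        if any(path.startswith(prefix) for prefix in disallow):
            continue  # a compliant crawler never requests a disallowed path
        html = site.get(path)
        if html is None:
            continue  # stands in for a 404 Not Found response
        visited.append(path)
        extractor = LinkExtractor()
        extractor.feed(html)
        for link in extractor.links:
            target = urlsplit(urljoin(BASE + path, link))  # resolve relative links
            if target.netloc != urlsplit(BASE).netloc:
                continue  # stay on the same host
            next_path = target.path or "/"
            if next_path not in seen:
                seen.add(next_path)
                queue.append(next_path)
    return visited


# Hypothetical small site with one private area.
SITE = {
    "/": '<a href="/about">About</a> <a href="/private/report.html">Report</a>',
    "/about": '<a href="/">Home</a> <a href="team">Team</a>',
    "/team": "<p>No links here</p>",
    "/private/report.html": "<p>Internal report</p>",
}

if __name__ == "__main__":
    print(crawl(SITE, "/", disallow=["/private"]))
```

With `/private` disallowed the crawl visits `/`, `/about` and `/team` only; with an empty disallow list it also retrieves `/private/report.html`, which is exactly why a Disallow entry reveals where such content lives.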

robots.txt in webroot

As an example, the beginning of the robots.txt file from http://www.google.com/robots.txt sampled on 11 August 2013 is quoted below:

    User-agent: *
    Disallow: /search
    Disallow: /sdch
    Disallow: /groups
    Disallow: /images
    Disallow: /catalogs
    ...

The User-agent directive identifies the web spider/robot/crawler to which the rules that follow apply. For example, "User-agent: Googlebot" refers to Google's Googlebot crawler, while "User-agent: *" in the example above applies to all web spiders/robots/crawlers [2], as quoted below:

    User-agent: *

The Disallow directive specifies which resources spiders/robots/crawlers are asked not to retrieve. In the example above, directories such as the following are disallowed:

    ...
    Disallow: /search
    Disallow: /sdch
    Disallow: /groups
    Disallow: /images
    Disallow: /catalogs
    ...
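For the second test objective, the list of directories that spiders/robots/crawlers are asked to avoid can be extracted per User-agent. The Python sketch below is a deliberately simplified parser (it ignores Allow lines, wildcards and other extensions), and the sample file, including the `ExampleBot` agent, is hypothetical.

```python
def disallowed_paths_by_agent(robots_txt: str) -> dict[str, list[str]]:
    """Map each User-agent value to the Disallow paths of its group.

    Simplified sketch: comments are stripped, consecutive User-agent lines
    share one group, and only non-empty Disallow values are collected.
    """
    groups: dict[str, list[str]] = {}
    agents: list[str] = []
    previous_was_agent = False
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if not sep:
            continue  # blank line or not a "field: value" record
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            if not previous_was_agent:
                agents = []  # a new group starts here
            agents.append(value)
            groups.setdefault(value, [])
            previous_was_agent = True
            continue
        previous_was_agent = False
        if field == "disallow" and value:  # an empty Disallow allows everything
            for agent in agents:
                groups[agent].append(value)
    return groups


SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /sdch

User-agent: Googlebot
User-agent: ExampleBot
Disallow: /images
"""

if __name__ == "__main__":
    print(disallowed_paths_by_agent(SAMPLE_ROBOTS_TXT))
```

For the sample, `*` maps to `/search` and `/sdch`, while `Googlebot` and `ExampleBot` share the group that disallows `/images`. The resulting paths are the candidate directories to review for sensitive content.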

Web spiders/robots/crawlers can intentionally ignore the Disallow directives specified in a robots.txt file [3], such as those operated by Social Networks[2] to ensure that shared links remain valid. Hence, robots.txt should not be considered a mechanism to enforce restrictions on how web content is accessed, stored, or republished by third parties.
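Compliance happens entirely on the client side: a well-behaved crawler consults its own robots.txt parser before each request, and nothing in robots.txt prevents a crawler that skips the check. Python's standard `urllib.robotparser` module shows that check; `ExampleBot` is a hypothetical user agent and the rules are the Google excerpt quoted above.

```python
from urllib.robotparser import RobotFileParser

# The excerpt quoted above (www.google.com/robots.txt, sampled 11 August 2013).
GOOGLE_EXCERPT = """\
User-agent: *
Disallow: /search
Disallow: /sdch
Disallow: /groups
Disallow: /images
Disallow: /catalogs
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a compliant crawler may fetch `url` under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/search?q=owasp", "/images/branding.png", "/intl/en/about/"):
        verdict = is_allowed(GOOGLE_EXCERPT, "ExampleBot", "http://www.google.com" + path)
        print(f"{path}: {'allowed' if verdict else 'disallowed'}")
```

The first two paths are reported as disallowed because they start with a Disallow prefix; the third is allowed because no rule matches it.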

<META> Tag

<META> tags are located within the HEAD section of each HTML document and should be consistent across a web site, because a robot/spider/crawler is likely to start from a document link other than the webroot, i.e. a "deep link"[3].

If there is no <META NAME="ROBOTS" ... > entry, then the Robots Exclusion Protocol defaults to "INDEX,FOLLOW". The other two valid entries defined by the Robots Exclusion Protocol are the same values prefixed with "NO", i.e. "NOINDEX" and "NOFOLLOW".
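The defaulting rule can be expressed directly. This Python sketch uses the standard `html.parser` module to collect the content of every `<meta name="robots">` tag and then applies the INDEX,FOLLOW default; only the four values named above are considered, and the function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Records the directives of every <meta name="robots"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser already lowercases tag and attribute names, not values.
        values = {name: value or "" for name, value in attrs}
        if values.get("name", "").lower() == "robots":
            self.directives.extend(
                token.strip().lower()
                for token in values.get("content", "").split(",")
                if token.strip()
            )


def effective_robots_directives(html: str) -> set[str]:
    """Return the robots directives for a page, applying the INDEX,FOLLOW default."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    if "noindex" not in directives:
        directives.add("index")
    if "nofollow" not in directives:
        directives.add("follow")
    return directives
```

For example, a page containing only `<META NAME="ROBOTS" CONTENT="NOINDEX">` yields `noindex` and `follow`, and a page with no robots tag at all yields `index` and `follow`.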

Web spiders/robots/crawlers can intentionally ignore the <META NAME="ROBOTS"> tag, as the robots.txt file convention is preferred. Hence, <META> tags should not be considered the primary mechanism, but rather a complementary control to robots.txt.

Black Box testing and example

robots.txt in webroot - with "wget" or "curl"

The robots.txt file is retrieved from the web root directory of the web server.

For example, to retrieve the robots.txt file from www.google.com using "wget" or "curl":

    cmlh$ wget http://www.google.com/robots.txt
    --2013-08-11 14:40:36-- http://www.google.com/robots.txt
    Resolving www.google.com... 74.125.237.17, 74.125.237.18, 74.125.237.19, ...
    Connecting to www.google.com|74.125.237.17|:80... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: unspecified [text/plain]
    Saving to: ‘robots.txt.1’

    [ <=> ] 7,074 --.-K/s in 0s

    2013-08-11 14:40:37 (59.7 MB/s) - ‘robots.txt’ saved [7074]

    cmlh$ head -n5 robots.txt
    User-agent: *
    Disallow: /search
    Disallow: /sdch
    Disallow: /groups
    Disallow: /images
    cmlh$

    cmlh$ curl -O http://www.google.com/robots.txt
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    101  7074    0  7074    0     0   9410      0 --:--:-- --:--:-- --:--:-- 27312
    cmlh$ head -n5 robots.txt
    User-agent: *
    Disallow: /search
    Disallow: /sdch
    Disallow: /groups
    Disallow: /images
    cmlh$
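The same retrieval can be scripted, which helps when reviewing many hosts. Below is a minimal Python sketch using only the standard library; the `User-Agent` string is an arbitrary placeholder, and `urlopen` raises `urllib.error.HTTPError` if the server answers with an error status such as 404 (i.e. no robots.txt is published).

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import Request, urlopen


def robots_url(site_url: str) -> str:
    """Return the robots.txt URL for the web root of site_url's host."""
    parts = urlsplit(site_url)
    # Keep scheme and host (including any port); replace path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots_txt(site_url: str, timeout: float = 10.0) -> str:
    """Download and decode robots.txt, comparable to `wget` or `curl -O` above."""
    request = Request(robots_url(site_url), headers={"User-Agent": "robots-review-sketch"})
    with urlopen(request, timeout=timeout) as response:  # raises HTTPError on 4xx/5xx
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

For example, `print("\n".join(fetch_robots_txt("http://www.google.com/").splitlines()[:5]))` is the counterpart of `head -n5 robots.txt`. Passing a deep link such as `http://www.google.com/some/page` still requests `/robots.txt`, since the file is looked up in the web root.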

robots.txt in webroot - with rockspider

"rockspider"[4] automates the creation of the initial scope of files and directories/folders of a web site for spiders/robots/crawlers.

For example, to create the initial scope based on the Allow: directive from www.google.com using "rockspider"[5]:

    cmlh$ ./rockspider.pl -www www.google.com

    "Rockspider" Alpha v0.1_2

    Copyright 2013 Christian Heinrich
    Licensed under the Apache License, Version 2.0

    1. Downloading http://www.google.com/robots.txt
    2. "robots.txt" saved as "www.google.com-robots.txt"
    3. Sending Allow: URIs of www.google.com to web proxy i.e. 127.0.0.1:8080
         /catalogs/about sent
         /catalogs/p? sent
         /news/directory sent
         ...
    4. Done.

    cmlh$

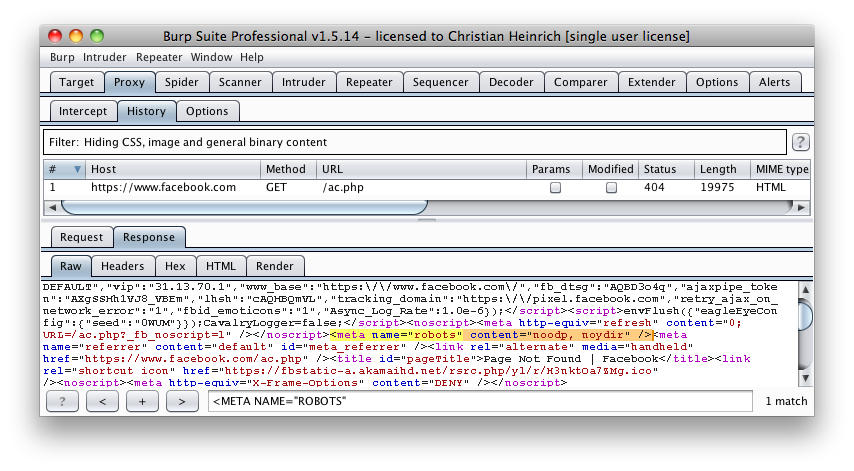
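rockspider itself is a Perl script. The following is not its code but a Python sketch of the same idea, assuming an intercepting proxy (such as Burp) is listening on 127.0.0.1:8080 as in the transcript above; `allow_paths` and `send_through_proxy` are hypothetical helper names.

```python
from urllib.request import ProxyHandler, build_opener


def allow_paths(robots_txt: str) -> list[str]:
    """Return each Allow: path once, in the order it appears in robots.txt."""
    paths: list[str] = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        field, sep, value = line.partition(":")
        value = value.strip()
        if sep and field.strip().lower() == "allow" and value and value not in paths:
            paths.append(value)
    return paths


def send_through_proxy(host: str, paths: list[str], proxy: str = "http://127.0.0.1:8080") -> None:
    """Request http://host/path for each path via the proxy so it appears in its site map."""
    opener = build_opener(ProxyHandler({"http": proxy}))
    for path in paths:
        try:
            with opener.open(f"http://{host}{path}", timeout=10) as response:
                print(f"{path} sent ({response.status})")
        except OSError as error:  # URLError and HTTPError both derive from OSError
            print(f"{path} failed: {error}")
```

With the proxy running, `send_through_proxy("www.google.com", allow_paths(text))`, where `text` holds the downloaded robots.txt, seeds the proxy with the Allow: URIs in the same way as step 3 of the rockspider output.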

<META> Tags - with Burp

Based on the Disallow directive(s) listed within the robots.txt file in webroot, a regular expression search for <META NAME="ROBOTS" is undertaken within each web page, and the result is compared to the robots.txt file in webroot.

For example, the robots.txt file from facebook.com has a "Disallow: /ac.php" entry[6], and the resulting search for <META NAME="ROBOTS" is shown below:

The above might be considered a fail, since "INDEX,FOLLOW" is the default <META> tag specified by the Robots Exclusion Protocol, yet "Disallow: /ac.php" is listed in robots.txt.
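Burp's search is interactive; the same comparison can be sketched offline in Python, assuming the HTML of each page has already been retrieved (for example, exported from the proxy). The `pages` mapping and all paths other than `/ac.php` are hypothetical, and the regular expression, like any regex over HTML, is a brittle approximation that mirrors the manual search rather than a full HTML parser.

```python
import re

# Captures the content of <meta name="robots" content="...">, case-insensitively,
# with the name and content attributes in either order.
META_ROBOTS = re.compile(
    r"""<meta\s+(?=[^>]*\bname\s*=\s*["']?robots\b)[^>]*?\bcontent\s*=\s*["']([^"']*)["']""",
    re.IGNORECASE,
)


def indexable_despite_disallow(disallowed: list[str], pages: dict[str, str]) -> list[tuple[str, str]]:
    """Flag pages listed under Disallow whose META robots tag does not say NOINDEX.

    `pages` maps a URL path to its HTML. Returns (path, directives) pairs.
    """
    findings: list[tuple[str, str]] = []
    for path, html in pages.items():
        if not any(path.startswith(prefix) for prefix in disallowed):
            continue  # not listed under Disallow, so out of scope for this check
        directives = {
            token.strip().lower()
            for content in META_ROBOTS.findall(html)
            for token in content.split(",")
            if token.strip()
        }
        if "noindex" not in directives:
            shown = ",".join(sorted(directives)) or "none (defaults to INDEX,FOLLOW)"
            findings.append((path, shown))
    return findings
```

A page such as `/ac.php` with no robots tag is reported as `none (defaults to INDEX,FOLLOW)`, which corresponds to the possible fail described above; pages that carry NOINDEX are not reported.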

Analyze robots.txt using Google Webmaster Tools

Google provides an "Analyze robots.txt" function as part of its "Google Webmaster Tools", which can assist with testing [4]. The procedure is as follows:

1. Sign into Google Webmaster Tools with your Google Account.

2. On the Dashboard, click the URL for the site you want.

3. Click Tools, and then click Analyze robots.txt.

Gray Box testing and example

The process is the same as Black Box testing above.

Tools

- Browser (View Source function)

- curl

- wget

- rockspider[7]

References

Whitepapers

- [1] "The Web Robots Pages" - http://www.robotstxt.org/

- [2] "Block and Remove Pages Using a robots.txt File" - http://support.google.com/webmasters/bin/answer.py?hl=en&answer=156449&from=40364&rd=1

- [3] "(ISC)2 Blog: The Attack of the Spiders from the Clouds" - http://blog.isc2.org/isc2_blog/2008/07/the-attack-of-t.html

- [4] "Block and Remove Pages Using a robots.txt File" - http://support.google.com/webmasters/bin/answer.py?hl=en&answer=156449&from=35237&rd=1

- [5] "Telstra customer database exposed" - http://www.smh.com.au/it-pro/security-it/telstra-customer-database-exposed-20111209-1on60.html