Application Security Guide For CISOs

- Introduction

- Foreword

- Part I: Reasons for Investing in Application Security

- Part II: Criteria for Managing Application Security Risks

- Part III: Selection of Application Security Processes

- 1 Part IV: Selection of Metrics For Managing Risks & Application Security Investments

- 2 References

- 3 About OWASP

- 4 Appendix

- 4.1 Appendix I-A: Value of Data & Cost of an Incident

- 4.2 Appendix I-B: Calculation Sheets

- 4.3 Appendix I-C: Online Calculator

- 4.4 Appendix I-D: Quick CISO Reference to OWASP's Guide & OWASP Projects

Part IV: Selection of Metrics For Managing Risks & Application Security Investments

Introduction

The aim of this part of the guide is to help CISOs manage the several aspects of an application security program, specifically risk and compliance, as well as application security resources such as processes, people and tools. One of the goals of application security metrics is to measure application security risks as well as compliance with the application security requirements mandated by information security standards. Among the critical application security processes that the CISO needs to manage and report on is application vulnerability management. It is often the CISO's responsibility, for example, to report the status of application security activities to senior management, such as the status of application security testing and of software security activities in the SDLC. From the risk management perspective, it is important that the application security metrics allow reporting on technical risks, such as the unmitigated vulnerabilities in the applications that are developed and managed by the organization. Another important aspect of application security metrics is to measure coverage: the percentage of the application portfolio regularly assessed by the application security verification program, the percentage of internal versus external applications covered, the inherent risk of these applications, the type of security assessments performed on them and when in the SDLC these assessments are performed. These metrics help the CISO report on application security process compliance and application security risks to the head of information security as well as to the application business owners.

Since one of the CISO's responsibilities is to manage both information security and application security risks and to make decisions on how to mitigate them, it is important that these metrics measure risk in terms of the exposure of the organization's assets, including application data and functions, to vulnerabilities.

Application Security Process Metrics

Metrics and Measurements Goals

The goal of application security process metrics is to determine how well the organization's application security processes meet the security requirements set forth by application security policies and technical standards. For example, an application vulnerability process might include a requirement to execute vulnerability assessments on internet-facing applications every six or twelve months, depending on the inherent risk rating of the application. Another vulnerability process requirement might be to execute security in the SDLC processes, such as architecture risk analysis/threat modeling, static source code analysis/secure code reviews and risk-based security testing, on applications that store customers' confidential information and whose business functionality is a critical service to customers.

From the perspective of process coverage, one of the goals of these metrics might be to report on the coverage of application security processes, that is, to measure which applications fall in scope for application security assessments, in order to identify potential vulnerability assessment gaps based upon application type and application security requirements. These metrics help the CISO provide visibility on process coverage as well as on the status of the operational execution of the application security program. For example, the metrics might show (e.g. in red status) that some of the application security processes in the SDLC, such as secure code reviews, are not executed for some of the applications rated as high risk, and flag this as out of compliance with the security testing requirements. These metrics allow the CISO to prioritize resources by allocating them where they are most needed to comply with the standard process requirements.

Another important measurement for application security testing is the time at which application security processes are scheduled and executed, in order to identify potential delays in the scheduling and execution of processes such as secure code review/static source code analysis and ethical hacking/application penetration testing.
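As a rough illustration of the coverage and compliance metrics described above, the following Python sketch computes, for each inherent risk rating, the percentage of applications that received a required assessment, and lists the high risk applications that did not. The portfolio records, field names and the choice of secure code review as the required activity are hypothetical and are not part of this guide.

```python
from collections import defaultdict

# Hypothetical portfolio: each record lists the assessments actually performed.
PORTFOLIO = [
    {"name": "online-banking", "risk": "high",
     "assessments": {"threat_model", "code_review", "pen_test"}},
    {"name": "payments-api", "risk": "high", "assessments": {"pen_test"}},
    {"name": "hr-portal", "risk": "medium", "assessments": {"code_review"}},
    {"name": "intranet-wiki", "risk": "low", "assessments": set()},
]


def coverage_by_risk(portfolio, required):
    """Return {risk rating: % of apps at that rating that had `required`}."""
    total = defaultdict(int)
    covered = defaultdict(int)
    for app in portfolio:
        total[app["risk"]] += 1
        if required in app["assessments"]:
            covered[app["risk"]] += 1
    return {risk: 100.0 * covered[risk] / total[risk] for risk in total}


def out_of_compliance(portfolio, required, risk="high"):
    """Names of apps at the given risk rating that miss the required assessment."""
    return [app["name"] for app in portfolio
            if app["risk"] == risk and required not in app["assessments"]]


if __name__ == "__main__":
    print(coverage_by_risk(PORTFOLIO, "code_review"))
    print(out_of_compliance(PORTFOLIO, "code_review"))
```

A report built this way could show the out-of-compliance list in red status, as in the example given in the text.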

Application Security Risk Metrics

Vulnerability Risk Management Metrics

One of the CISO's responsibilities is to manage application security risks. From the technical risk perspective, application security risks might be due to vulnerabilities that expose application assets, such as the data and the critical functions of the application, to attacks seeking to compromise them. Typically, technical risk management consists of mitigating the risks posed by vulnerabilities by applying fixes and countermeasures. The mitigation of these vulnerabilities is typically prioritized based upon a qualitative measurement of risk. For example, for each application that is developed and managed by the organization there will be a certain number of vulnerabilities identified at high, medium and low risk severity. The higher the number of high and medium risk vulnerabilities, the higher the risk to the application. The higher the value of the data assets protected by the application and the criticality of the functions it supports, the higher the impact of these vulnerabilities on the application assets.

One important emphasis in vulnerability metrics is determining the number of vulnerabilities that are still not fixed. A given number of application vulnerabilities might still be “open”, that is, not yet fixed in the production environment. These represent a risk to the organization and require the CISO to prioritize risk mitigation actions, such as “closing” the vulnerability within the compliance timeframe deemed acceptable by the application vulnerability management standards.
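A minimal sketch of how open vulnerabilities could be checked against compliance timeframes is shown below. The remediation timeframes per severity, the record fields and the sample findings are assumptions made for illustration; real values would come from the organization's vulnerability management standard.

```python
from datetime import date

# Hypothetical remediation timeframes, in days, per risk severity.
REMEDIATION_DAYS = {"high": 30, "medium": 90, "low": 180}

# Hypothetical findings; `closed` is None while the vulnerability is still open.
FINDINGS = [
    {"id": "V1", "severity": "high", "found": date(2024, 1, 1), "closed": None},
    {"id": "V2", "severity": "medium", "found": date(2024, 1, 1), "closed": None},
    {"id": "V3", "severity": "high", "found": date(2024, 1, 1),
     "closed": date(2024, 1, 20)},
]


def open_findings(findings):
    """Return the findings that are not yet fixed."""
    return [f for f in findings if f["closed"] is None]


def overdue(findings, today):
    """Return ids of open findings older than the timeframe for their severity."""
    late = []
    for f in open_findings(findings):
        age_days = (today - f["found"]).days
        if age_days > REMEDIATION_DAYS[f["severity"]]:
            late.append(f["id"])
    return late


if __name__ == "__main__":
    print(len(open_findings(FINDINGS)), overdue(FINDINGS, date(2024, 3, 1)))
```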

Security Incident Metrics

Another important metric for the CISO in managing information security risks is the reporting of security incidents for applications that are developed and/or managed by the organization. The CISO might gather this data from the incidents reported by the SIRT (Security Incident Response Team) that affect a given application, such as data breaches resulting from the exploit of a vulnerability. Correlating the security incidents reported for a given application with the vulnerabilities reported by security testing allows the CISO to focus the risk mitigation effort on the vulnerabilities that might cause the most impact to the organization. Obviously, waiting for a security incident to occur before deciding which vulnerabilities to mitigate is symptomatic of a reactive rather than proactive approach to risk management.

Threat Intelligence Reporting and Attack Monitoring Metrics

Organizations that are proactive about risk do not wait for security incidents to occur. Instead, they learn from information about attacks and from threat intelligence, and use that information to take proactive risk mitigation measures, such as developing and implementing countermeasures while also mitigating all known high risk vulnerabilities that might be exploited in an incident to cause the most impact to the organization. The CISO can use threat intelligence reports, as well as metrics from monitored application layer security events such as those from SIEM (Security Information and Event Management) systems, to assess the level of risk. Unfortunately, today most security incidents are discovered and reported only months after the initial intrusion or data compromise. A security metric that is actionable toward preventing attacks is of critical importance for the CISO, since it might allow deciding which applications to put under alert and monitoring, and acting quickly in the case of an attack. For example, a threat alert of a possible distributed denial of service attack against online banking applications might allow the CISO to put the organization on alert and prepare to roll out countermeasures to prevent an outage. Similarly, a reported threat of malware targeting online banking applications to steal user credentials and conduct unauthorized financial transactions allows the CISO to issue monitoring alerts to the team responsible for security incident and event monitoring of the online banking application.

Security in SDLC Management Metrics

Metrics for Risk Mitigation Decisions

Once vulnerabilities are identified, the next step is to decide which should be fixed, and when and how they should be fixed. The first question can be answered by the compliance requirements of the vulnerability assessment process, which might require, for example, that high risk vulnerabilities be remediated in shorter time frames than medium and low risk vulnerabilities. The requirement might also vary depending on the type of application, for example a newly developed application versus a new release of an existing application. Since new applications have not been security tested before, they represent higher risks than existing applications, and this might require that high risk vulnerabilities be mitigated prior to releasing the application into production. Once the issues are identified and prioritized for mitigation based upon the risk severity of the vulnerability, the next step is to determine how to fix the vulnerability. This depends on factors such as the type of the vulnerability, the security controls/measures that are affected by it and where it was most likely introduced. These metrics allow the CISO to point to the root causes of vulnerabilities and present the case for remediation to the application development teams.

Metrics for Vulnerability Root Causes Identification

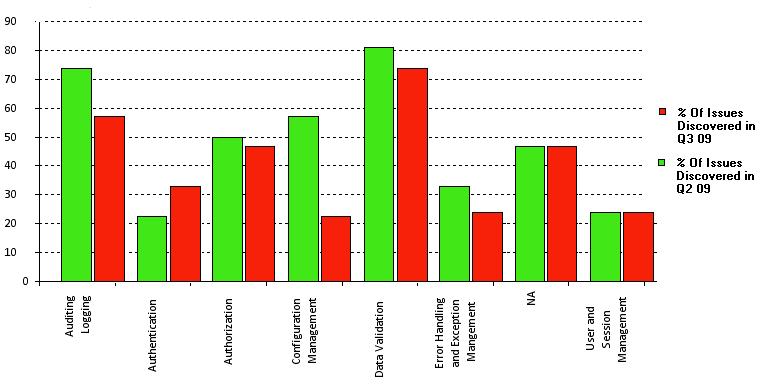

When vulnerability metrics are reported as a trend, they allow the CISO to assess improvements. For example, when the same types of security issues are measured over time for the same type of application, it is possible for the CISO to point to potential root causes. With trend vulnerability metrics and a categorization of the types of vulnerabilities, it is also possible for the CISO to make the case for investing in certain types of security activities, such as process improvements, adoption of testing tools, and training and awareness. For example, the metrics shown in Figure 1 show positive trends for certain types of vulnerabilities by comparing two quarterly releases of the same applications. Application security improvements, measured as a reduced number of vulnerabilities identified from one quarterly release to the next, are observed for most vulnerability types except for authentication and user/session management issues.

The CISO might use these metrics to discuss with CIOs and development directors whether the organization is getting better or worse over time at releasing more secure application software, and to direct the application security resources (e.g. process, people and tools) where they are most needed for reducing risks. With the metrics shown in Figure 1, for example, assuming that the application changes introduced between releases do not differ much in type and complexity, and that the number and type of software developers in the development team and the tools used are also similar, a case can be made for focusing on the types of vulnerabilities that the organization is having trouble fixing, for example through better design and implementation of authentication and user/session management controls. The CISO might then coordinate with the CIO and the development directors to schedule targeted training on these types of vulnerabilities, document development guides for authentication and session management and adopt specific security test cases. Ultimately, this coordinated effort will empower software developers to design, implement and test more secure authentication and session management controls, and these improvements will show in the vulnerability metrics.
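The release-to-release comparison described above can be sketched in a few lines of Python. The counts below are invented for illustration and are not the values plotted in Figure 1; the category names are likewise only examples.

```python
# Hypothetical vulnerability counts per category for two quarterly releases.
RELEASE_Q1 = {"input validation": 12, "authentication": 5,
              "session management": 4, "error handling": 6}
RELEASE_Q2 = {"input validation": 7, "authentication": 6,
              "session management": 4, "error handling": 2}


def trend(previous, current):
    """Return {category: change in count}; a negative value is an improvement."""
    categories = sorted(set(previous) | set(current))
    return {c: current.get(c, 0) - previous.get(c, 0) for c in categories}


def not_improving(previous, current):
    """Categories whose count stayed the same or grew between releases."""
    return [c for c, delta in trend(previous, current).items() if delta >= 0]


if __name__ == "__main__":
    print(trend(RELEASE_Q1, RELEASE_Q2))
    print(not_improving(RELEASE_Q1, RELEASE_Q2))
```

With these sample numbers the categories flagged for attention are authentication and session management, which mirrors the kind of conclusion the text draws from Figure 1.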

Metrics for Software Security Investments

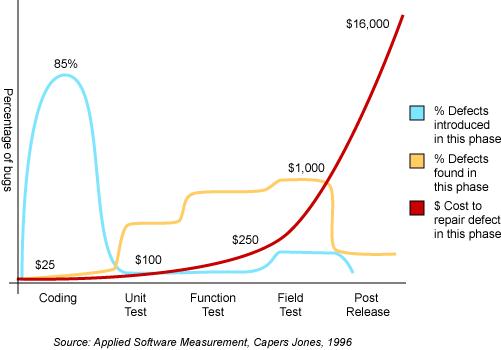

Another important use of S-SDLC security metrics is to decide where in the SDLC to invest in security testing and remediation. To know this, it is important to measure in which phase of the SDLC most of the vulnerabilities (the highest percentage of issues) originate, when these vulnerabilities are tested for and how much it costs the organization to fix them in each phase of the SDLC. A sample metric that measures this is shown in Figure 2, based upon a case study on the costs of testing and managing software bugs (Ref Capers Jones Study).

A similar type of security defect management metric can be used by CISOs to manage security issues effectively while reducing overall security costs. Assuming the CISO has rolled out a security in the SDLC process and has budget allocated for security in the SDLC activities, such as secure coding training, a secure code review process and static code analysis tools, this metric allows the CISO to make the case for investing in testing and fixing security issues in the early phases of the SDLC. This is based upon the following measurements from the case study: 1) most of the vulnerabilities are introduced by software developers during coding, 2) the majority of these vulnerabilities are tested for during field tests prior to production and 3) testing and fixing vulnerabilities late in the SDLC is the most inefficient approach, since it is approximately ten times more expensive to fix issues during pre-production tests than during unit tests. CISOs can use vulnerability case studies like this one, or their own metrics, to make the case for investing in secure software development activities, since these will save the organization time and money.
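To make the cost argument concrete, the sketch below compares the total cost of fixing the same number of issues when most are caught late versus early. Only the roughly tenfold ratio between pre-production tests and unit tests comes from the text above; the issue counts and the cost of a unit-test fix are hypothetical.

```python
# Relative cost to fix one issue in each phase. The 10x ratio is taken from
# the case study cited above; 1.0 is an arbitrary baseline for unit tests.
RELATIVE_FIX_COST = {"unit_test": 1.0, "pre_production_test": 10.0}


def total_fix_cost(found_in_phase, unit_test_fix_cost):
    """Total cost of fixing issues, given how many were found in each phase."""
    return sum(count * RELATIVE_FIX_COST[phase] * unit_test_fix_cost
               for phase, count in found_in_phase.items())


# Hypothetical scenarios: 100 issues, fixing one during unit tests costs $100.
LATE = {"unit_test": 20, "pre_production_test": 80}
EARLY = {"unit_test": 80, "pre_production_test": 20}

if __name__ == "__main__":
    late_cost = total_fix_cost(LATE, 100)
    early_cost = total_fix_cost(EARLY, 100)
    print(late_cost, early_cost, late_cost - early_cost)
```

Under these assumptions, shifting detection toward unit tests lowers the total from $82,000 to $28,000; an organization's own defect data would replace the sample figures.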

References

Verizon 2011 Data Breach Investigation Report: http://www.verizonbusiness.com/resources/reports/rp_data-breach-investigations-report-2011_en_xg.pdf

US Q2 2011 GDP Report Is Bad News for the US Tech Sector, But With Some Silver Linings: http://blogs.forrester.com/andrew_bartels/11-07-29-us_q2_2011_gdp_report_is_bad_news_for_the_us_tech_sector_but_with_some_silver_linings

Supplement to Authentication in an Internet Banking Environment: http://www.fdic.gov/news/news/press/2011/pr11111a.pdf

PCI-DSS: https://www.pcisecuritystandards.org/security_standards/index.php

OWASP Top Ten: https://www.owasp.org/index.php/Category:OWASP_Top_Ten_Project

Gartner teleconference on application security, Joseph Feiman, VP and Gartner Fellow: http://www.gartner.com/it/content/760400/760421/ks_sd_oct.pdf

Identity Theft Survey Report, Federal Trade Commission, September 2003: http://www.ftc.gov/os/2003/09/synovatereport.pdf

Dan E Geer Economics and Strategies of Data Security: http://www.verdasys.com/thoughtleadership/

Data Loss Database: http://datalossdb.org/

WHID, Web Hacking Incident Database: http://projects.webappsec.org/w/page/13246995/Web-Hacking-Incident-Database

Imperva's Web Application Attack Report: http://www.imperva.com/docs/HII_Web_Application_Attack_Report_Ed1.pdf

Albert Gonzalez data breach indictment: http://www.wired.com/images_blogs/threatlevel/2009/08/gonzalez.pdf

First Annual Cost of Cyber Crime Study Benchmark Study of U.S. Companies, Sponsored by ArcSight Independently conducted by Ponemon Institute LLC, July 2010: http://www.arcsight.com/collateral/whitepapers/Ponemon_Cost_of_Cyber_Crime_study_2010.pdf

2010 Annual Study: U.S. Cost of a Data Breach: http://www.symantec.com/content/en/us/about/media/pdfs/symantec_ponemon_data_breach_costs_report.pdf?om_ext_cid=biz_socmed_twitter_facebook_marketwire_linkedin_2011Mar_worldwide_costofdatabreach

Gordon, L.A. and Loeb, M.P. “The economics of information security investment”, ACM Transactions on Information and Systems Security, Vol.5, No.4, pp.438-457, 2002.

Total Cost of Ownership: http://en.wikipedia.org/wiki/Total_cost_of_ownership

Wes Sonnenreich, Return of Security Investment, Practical Quantitative Model: http://www.infosecwriters.com/text_resources/pdf/ROSI-Practical_Model.pdf

Tangible ROI through Secure Software Engineering: http://www.mudynamics.com/assets/files/Tangible%20ROI%20Secure%20SW%20Engineering.pdf

The Privacy Dividend: the business case for investing in proactive privacy protection, Information Commissioner's Office, UK, 2009: http://www.ico.gov.uk/news/current_topics/privacy_dividend.aspx

Share prices and data breaches: http://www.securityninja.co.uk/data-loss/share-prices-and-data-breaches/

A commissioned study conducted by Forrester Consulting on behalf of VeriSign: DDoS: A Threat You Can’t Afford To Ignore: http://www.verisigninc.com/assets/whitepaper-ddos-threat-forrester.pdf

Sony data breach could be most expensive ever: http://www.csmonitor.com/Business/2011/0503/Sony-data-breach-could-be-most-expensive-ever

Health Net discloses loss of data to 1.9 million customers: http://www.computerworld.com/s/article/9214600/Health_Net_discloses_loss_of_data_to_1.9_million_customers

EMC spends $66 million to clean up RSA SecureID mess: http://www.infosecurity-us.com/view/19826/emc-spends-66-million-to-clean-up-rsa-secureid-mess/

Dmitri Alperovitch, Vice President, Threat Research, McAfee, Revealed: Operation Shady RAT: http://www.mcafee.com/us/resources/white-papers/wp-operation-shady-rat.pdf

OWASP Security Spending Benchmarks Project Report: https://www.owasp.org/images/b/b2/OWASP_SSB_Project_Report_March_2009.pdf

The Security Threat/Budget Paradox: http://www.verizonbusiness.com/Thinkforward/blog/?postid=164

Security and the Software Development Lifecycle: Secure at the Source, Aberdeen Group, 2011: http://www.aberdeen.com/Aberdeen-Library/6983/RA-software-development-lifecycle.aspx

State of Application Security - Immature Practices Fuel Inefficiencies, But Positive ROI Is Attainable, Forrester Consulting, 2011: http://www.microsoft.com/downloads/en/details.aspx?FamilyID=813810f9-2a8e-4cbf-bd8f-1b0aca7af61d&displaylang=en

About OWASP

Short piece about OWASP and including links to Projects, ASVS, SAMM, Commercial Code of Conduct, Citations, ???

Appendix

Appendix I-A: Value of Data & Cost of an Incident

This appendix discusses various information sources and derives a single illustrative value for use in the main text.

Value of Information

The selection of security measures must consider the value of the asset being protected. Like personal data, all types of data can have a value determined from a number of different perspectives. While it may be most common to look at the value of data as an asset to the organization or in terms of the cost of an incident, these are not always the most appropriate or the greatest valuations to consider. For example, a report looking at the value of personal data (personally identifiable information) suggests four perspectives from which personal information draws its privacy value. These are:

- its value as an asset used within the organization’s operations;

- its value to the individual to whom it relates;

- its value to other parties who might want to use the information, whether for legitimate or improper purposes;

- its societal value as interpreted by regulators and other groups.

The value to the subject of the data, to other parties or to society may be more appropriate for some organizations than others. The report also examines the wider consequences of not protecting (personal) data and the benefits of protection. It describes how incidents involving personal data that lead to financial fraud can have much larger impacts on individuals, and notes that financial effects are not the only impact. The report provides methods of calculation, with examples in which the value of an individual's personal data record could be in the range £500-£1,100 (approximately $800-$1,800) in 2008.

Data Breaches and Monetary Losses

Regarding the monetary loss per victim, exact figures vary depending on the factors considered in calculating them, such as the type of industry and the type of attack causing the data loss incident. According to a July 2010 study conducted by the Ponemon Institute on 45 organizations in different industry sectors about the costs of cyber attacks, web-based attacks account for 17% of annualized cyber-attack costs. This cost varies across industry sectors, with higher costs for defense, energy and financial services ($16.31 million, $15.63 million and $12.37 million respectively) than for organizations in retail, services and education.

Also, according to the 2011 Ponemon Institute annual survey of data loss costs for U.S. companies, the average cost per compromised record in 2010 was $214, up 5% from 2009. According to this survey, the communications sector bears the highest cost at $380 per customer record, with financial services second at $353, followed by healthcare at $345, media at $131, education at $112 and the public sector at $81.

The security company Symantec, which sponsored the report, developed with the Ponemon Institute a data breach risk calculator that can be used to calculate the likelihood of a data breach in the next 12 months, as well as the average cost per breach and the average cost per lost record.

The Ponemon Institute's direct cost estimates are also used for estimating the direct cost of the data breach incidents collected by OSF DataLossDB. The 2009 direct cost figure of $60.00 per record is multiplied by the number of records reported for each incident to obtain the monetary loss estimate. It is assumed that direct costs are suffered by the breached organizations, although this is not always true, as in the case of credit card number breaches where the direct costs are often suffered by banks and card issuers. Furthermore, the estimated costs do not include indirect costs (e.g. time, effort and other organizational resources spent) or opportunity costs (e.g. the cost resulting from lost business opportunities because of reputation damage).
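The direct cost estimate described above is a simple multiplication, sketched here in Python. The $60.00 per record figure is the 2009 value quoted in the text; the number of records in the sample incident is hypothetical.

```python
# 2009 direct cost per record, as quoted in the text above.
DIRECT_COST_PER_RECORD = 60.00


def direct_loss_estimate(records_exposed):
    """Estimated direct monetary loss for one incident, in dollars.

    Indirect and opportunity costs are deliberately not included,
    in line with the limitation noted in the text.
    """
    return records_exposed * DIRECT_COST_PER_RECORD


if __name__ == "__main__":
    # Hypothetical incident exposing 100,000 records.
    print(direct_loss_estimate(100_000))
```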

Another possible way to make a risk management decision on whether to mitigate a potential loss is to determine whether the company would be legally liable for that data loss. Using the definition of legal liability from U.S. liability case law, where P is the probability of the loss and L is the amount of the loss, there is liability whenever the cost of adequate precautions, or the burden (B) to the company, is:

B < P x L

Applying this formula to 2003 data from the Federal Trade Commission (FTC), for example, the probability of the loss is 4.6%, the share of the population that suffered identity fraud. The amount of the loss per victim can be calculated from how much was spent to recover from the loss: 300 million hours of time spent at an hourly wage of $5.25, plus out-of-pocket expenses of $5 billion:

L = [Time Spent to Recover From Loss x Hourly Wage + Out-Of-Pocket Expenses] / Number of Victims

Using this formula with the 2003 FTC data, the loss per customer/victim due to an identity fraud incident is approximately $655, and the burden threshold for the company, P x L, is $30.11 per customer/victim per incident.

The risk management decision is then whether it is possible to protect a customer for $30.11 per customer per annum. If it is, then liability is found and there is liability risk for the company. This calculation can be useful for determining the potential liability risk in case of data loss incidents. For example, applying the FTC figures to the TJX Inc. incident of 2007, where the exposure of confidential information of 45,700,000 customers was initially announced, the exposure to the incident for the victims involved could be calculated as:
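The two formulas above can be checked with a short Python sketch. The text does not state the number of victims used, so the loss function takes it as a parameter and the example uses a round 10 million purely as an assumption; this gives about $657 rather than the quoted $655. The burden threshold is then computed from the quoted 4.6% and $655, which gives about $30.13, close to the $30.11 in the text (the small difference is presumably due to rounding of the loss figure).

```python
def loss_per_victim(hours_spent, hourly_wage, out_of_pocket, victims):
    """L = (time spent x hourly wage + out-of-pocket expenses) / number of victims."""
    return (hours_spent * hourly_wage + out_of_pocket) / victims


def burden_threshold(probability, loss):
    """P x L: the precaution cost below which liability would be found."""
    return probability * loss


def is_liable(burden, probability, loss):
    """Apply the rule B < P x L from the text."""
    return burden < burden_threshold(probability, loss)


if __name__ == "__main__":
    # 2003 FTC figures quoted above; the victim count is an assumed round number.
    estimate = loss_per_victim(300_000_000, 5.25, 5_000_000_000, 10_000_000)
    print(round(estimate, 2))
    print(round(burden_threshold(0.046, 655), 2))
```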

Cost exposure to the incident = Number of victims exposed by the incident x loss per victim

Using this formula with the TJX Inc. figure for the number of victims affected and the loss per victim from the FTC data, the cost of the incident, which represents the loss potential, is $30 billion. By factoring in the probability of the incident occurring, it is then possible to determine how much money should be spent on security measures. In the case of the TJX Inc. incident, for example, assuming a 1 in 1000 chance of occurrence, a $30 million security program for TJX Inc. would have been justifiable.
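The arithmetic behind these two figures can be reproduced as follows, using only numbers quoted in the text (45,700,000 customers, approximately $655 per victim and an assumed 1 in 1000 chance of occurrence). The function names are illustrative.

```python
def cost_exposure(victims, loss_per_victim):
    """Cost exposure = number of victims exposed x loss per victim."""
    return victims * loss_per_victim


def justifiable_spend(exposure, probability):
    """Security spending justified by the exposure weighted by its probability."""
    return exposure * probability


if __name__ == "__main__":
    exposure = cost_exposure(45_700_000, 655)     # about $30 billion
    budget = justifiable_spend(exposure, 1 / 1000)  # about $30 million
    print(f"${exposure:,.0f} exposure, ${budget:,.0f} justifiable")
```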

Summary

We can see that there are different ways to determine the value of information, and that some of these are based purely on the costs relating to data breaches. Overall, the references suggest that an individual's data can typically be valued in the range of $500 to $2,000 per record.

Appendix I-B: Calculation Sheets

Some grids for CISOs to enter their own numbers and calculations

Appendix I-C: Online Calculator

A calculator for estimating the cost incurred by organizations across industry sectors after experiencing a data breach is provided by Symantec, based upon survey data from the Ponemon Institute: https://databreachcalculator.com/

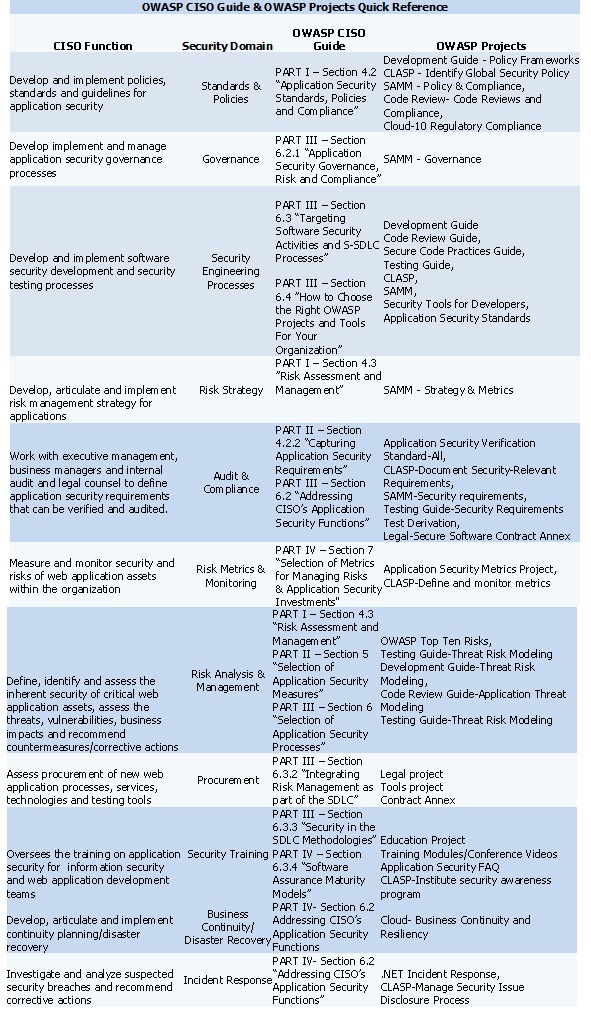

Appendix I-D: Quick CISO Reference to OWASP's Guide & OWASP Projects

Included herein is a quick reference to the guide. The quick reference maps typical CISO functions and information security domains to different sections of the guide and to relevant OWASP projects.
