

Revision as of 23:50, 28 April 2016

- Main

- Test Cases

- Test Case Details

- Tool Support/Results

- Quick Start

- Tool Scanning Tips

- RoadMap

- FAQ

- Acknowledgements

OWASP Benchmark Project

The OWASP Benchmark for Security Automation (OWASP Benchmark) is a free and open test suite designed to evaluate the speed, coverage, and accuracy of automated software vulnerability detection tools and services (henceforth simply referred to as 'tools'). Without the ability to measure these tools, it is difficult to understand their strengths and weaknesses or to compare them with each other. The OWASP Benchmark contains over 20,000 test cases that are fully runnable and exploitable, each of which maps to the appropriate CWE number for that vulnerability.

You can use the OWASP Benchmark with Static Application Security Testing (SAST) tools, Dynamic Application Security Testing (DAST) tools like OWASP ZAP, and Interactive Application Security Testing (IAST) tools. The current version of the Benchmark is implemented in Java. Future versions may expand to include other languages.

Benchmark Project Scoring Philosophy

Security tools (SAST, DAST, and IAST) are amazing when they find a complex vulnerability in your code. But with widespread misunderstanding of the specific vulnerabilities automated tools cover, end users are often left with a false sense of security.

We are on a quest to measure just how good these tools are at discovering and properly diagnosing security problems in applications. We rely on the long history of military and medical evaluation of detection technology as a foundation for our research. Therefore, the test suite tests both real and fake vulnerabilities.

There are four possible test outcomes in the Benchmark:

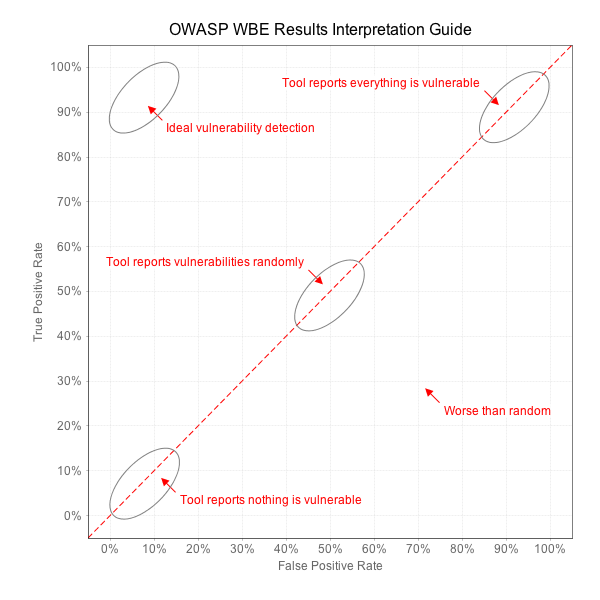

We can learn a lot about a tool from these four metrics. Consider a tool that simply flags every line of code as vulnerable. This tool will perfectly identify all vulnerabilities! But it will also have a 100% false positive rate and thus adds no value. Similarly, consider a tool that reports absolutely nothing. This tool will have zero false positives, but it will also identify zero real vulnerabilities, so it is also worthless. You can even imagine a tool that flips a coin to decide whether to report each test case as vulnerable. The result would be a 50% true positive rate and a 50% false positive rate. We need a way to distinguish valuable security tools from these trivial ones.

If you imagine the line that connects all these points, from (0,0) to (100,100), it roughly translates to "random guessing." The ultimate measure of a security tool is how much better it can do than this line. The diagram below shows how we will evaluate security tools against the Benchmark. A point plotted on this chart provides a visual indication of how well a tool did, considering both the True Positives and the False Positives it reported.

We also want to compute an individual score for that point in the range 0 - 100, which we call the Benchmark Accuracy Score. The Benchmark Accuracy Score is essentially a Youden Index, which is a standard way of summarizing the accuracy of a set of tests. Youden's index is one of the oldest measures of diagnostic accuracy. It is also a global measure of test performance, used to evaluate the overall discriminative power of a diagnostic procedure and to compare it with other tests. Youden's index is calculated by subtracting 1 from the sum of a test's sensitivity and specificity, both expressed as fractions rather than percentages: (sensitivity + specificity) - 1. For a test with poor diagnostic accuracy, Youden's index equals 0, and for a perfect test it equals 1.
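The scoring arithmetic above can be sketched in a few lines of Java. This is a minimal illustration under stated assumptions, not the Benchmark's own scorecard code: the class and method names are hypothetical, and it assumes the score is simply the Youden index (which reduces to TPR - FPR) multiplied by 100 and rounded to a whole number.

```java
// Hypothetical helper illustrating the Benchmark Accuracy Score as a scaled Youden index.
final class BenchmarkScoreSketch {

    // True Positive Rate (sensitivity): share of real vulnerabilities the tool reported.
    static double truePositiveRate(int truePositives, int falseNegatives) {
        return (double) truePositives / (truePositives + falseNegatives);
    }

    // False Positive Rate: share of fake (non-exploitable) test cases the tool flagged anyway.
    static double falsePositiveRate(int falsePositives, int trueNegatives) {
        return (double) falsePositives / (falsePositives + trueNegatives);
    }

    // Youden index J = sensitivity + specificity - 1, which simplifies to TPR - FPR.
    static double youdenIndex(double tpr, double fpr) {
        double sensitivity = tpr;
        double specificity = 1.0 - fpr;
        return sensitivity + specificity - 1.0;
    }

    // Scale J by 100; the result is negative when FPR exceeds TPR (point below the diagonal).
    static long benchmarkScore(double tpr, double fpr) {
        return Math.round(youdenIndex(tpr, fpr) * 100.0);
    }

    public static void main(String[] args) {
        System.out.println(benchmarkScore(0.98, 0.05)); // 93
        System.out.println(benchmarkScore(1.0, 1.0));   // 0: flags everything
        System.out.println(benchmarkScore(0.0, 0.0));   // 0: reports nothing
        System.out.println(benchmarkScore(0.5, 0.5));   // 0: coin flip
    }
}
```

The first call in `main` uses the .98/.05 rates from the worked example; the other three correspond to the trivial tools described above, and all of them land on the "guessing" line with a score of 0.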
So, for example, if a tool has a True Positive Rate (TPR) of .98 (i.e., 98%) and a False Positive Rate (FPR) of .05 (i.e., 5%):

- Sensitivity = TPR = .98

- Specificity = 1 - FPR = .95

- So the Youden Index is (.98 + .95) - 1 = .93

This would equate to a Benchmark score of 93, since we normalize the index to the range 0 - 100.

On the graph, the Benchmark Score is the length of the line from the point down to the diagonal "guessing" line. Note that a Benchmark score can actually be negative if the point is below the line. This happens when the False Positive Rate is higher than the True Positive Rate.

Benchmark Validity

The Benchmark tests are not exactly like real applications. The tests are derived from coding patterns observed in real applications, but the majority of them are considerably simpler than real applications. That is, most real-world applications will be considerably harder to successfully analyze than the OWASP Benchmark Test Suite. Although the tests are based on real code, it is possible that some tests may have coding patterns that don't occur frequently in real code.

Remember, we are trying to test the capabilities of the tools and make them explicit, so that users can make informed decisions about what tools to use, how to use them, and what results to expect. This is exactly aligned with the OWASP mission to make application security visible.

Generating Benchmark Scores

Anyone can use this Benchmark to evaluate vulnerability detection tools. The basic steps are:

That's it! Full details on how to do this are at the bottom of the page on the Quick Start tab. We encourage vendors, open source tool developers, and end users alike to verify their application security tools against the Benchmark. To ensure that the results are fair and useful, we ask that you follow a few simple rules when publishing results. We won't recognize any results that aren't easily reproducible:

Reporting Format

The Benchmark includes tools to interpret raw tool output, compare it to the expected results, and generate summary charts and graphs. We use the following table format in order to capture all the information generated during the evaluation.

Code Repo and Build/Run Instructions

See the Getting Started and Getting, Building, and Running the Benchmark sections on the Quick Start tab.

Licensing

The OWASP Benchmark is free to use under the GNU General Public License v2.0.


Quick Download

All test code and project files can be downloaded from OWASP GitHub.


Version 1.0 of the Benchmark was published on April 15, 2015 and had 20,983 test cases. On May 23, 2015, version 1.1 of the Benchmark was released. The 1.1 release improves on the previous version by making sure that there are both true positives and false positives in every vulnerability area. Version 1.2beta was released on August 15, 2015.

Version 1.2beta and later versions of the Benchmark are fully executable web applications, which means they can be scanned by any kind of vulnerability detection tool. The 1.2beta has been limited to slightly fewer than 3,000 test cases to make it easier for DAST tools to scan (so scans don't take as long, and the tools don't run out of memory or blow up the size of their databases). The final 1.2 release is expected to be the same size. The 1.2beta release covers the same vulnerability areas as 1.1; we added a few Spring database SQL Injection tests, but that's it. The bulk of the work was turning each test case into something that actually runs correctly AND is fully exploitable, and then generating a working UI on top of the test cases to turn them into a real running application.

Since 1.2beta is temporary, we aren't updating the chart below. You can still download the version 1.1 release of the Benchmark by cloning the release marked with the Git tag '1.1'.

The test case areas and quantities for the 1.1 and 1.2 releases are:

| Vulnerability Area | # of Tests in v1.1 | # of Tests in v1.2 | CWE Number |
|---|---|---|---|
| Command Injection | 2708 | 251 | 78 |
| Weak Cryptography | 1440 | 246 | 327 |
| Weak Hashing | 1421 | 236 | 328 |
| LDAP Injection | 736 | 59 | 90 |
| Path Traversal | 2630 | 268 | 22 |
| Secure Cookie Flag | 416 | 67 | 614 |
| SQL Injection | 3529 | 504 | 89 |
| Trust Boundary Violation | 725 | 126 | 501 |
| Weak Randomness | 3640 | 493 | 330 |
| XPATH Injection | 347 | 35 | 643 |
| XSS (Cross-Site Scripting) | 3449 | 455 | 79 |
| Total Test Cases | 21,041 | 2,740 | |

Each Benchmark version comes with a spreadsheet that lists every test case, the vulnerability category, the CWE number, and the expected result (true finding/false positive). Look for the file: expectedresults-VERSION#.csv in the project root directory.

Every test case is:

- a servlet or JSP (currently they are all servlets, but we plan to add JSPs)

- either a true vulnerability or a false positive for a single issue

The Benchmark is intended to help determine how well analysis tools correctly analyze a broad array of application and framework behavior, including:

- HTTP request and response problems

- Simple and complex data flow

- Simple and complex control flow

- Popular frameworks

- Inversion of control

- Reflection

- Class loading

- Annotations

- Popular UI technologies (particularly JavaScript frameworks)

Not all of these are tested by the Benchmark yet, but future enhancements are intended to provide more coverage of these issues.

Additional future enhancements could cover:

- All vulnerability types in the OWASP Top 10

- Does the tool find flaws in libraries?

- Does the tool find flaws spanning custom code and libraries?

- Does the tool handle web services (REST, XML, GWT, etc.)?

- Does the tool work with different app servers and Java platforms?

Example Test Case

Each test case is a simple Java EE servlet. BenchmarkTest00001 in version 1.0 of the Benchmark was an LDAP Injection test with the following metadata in the accompanying BenchmarkTest00001.xml file:

```xml
<test-metadata>
    <category>ldapi</category>
    <test-number>00001</test-number>
    <vulnerability>true</vulnerability>
    <cwe>90</cwe>
</test-metadata>
```
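A harness that needs this metadata could read it with the JDK's built-in DOM parser, as in the hypothetical sketch below. It only knows about the four elements shown above, and it disables DOCTYPE declarations as a standard XXE precaution; the Benchmark's own tooling may read these files differently.

```java
import java.io.StringReader;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

// Hypothetical reader for a BenchmarkTestNNNNN.xml metadata file (shown on an in-memory string).
final class TestMetadataSketch {

    // The four fields shown in the example metadata.
    private static final String[] FIELDS = {"category", "test-number", "vulnerability", "cwe"};

    static Map<String, String> read(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Reject DOCTYPE declarations so the parser cannot be abused via XXE.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Document doc = factory.newDocumentBuilder().parse(new InputSource(new StringReader(xml)));
            Map<String, String> fields = new LinkedHashMap<>();
            for (String tag : FIELDS) {
                fields.put(tag, doc.getElementsByTagName(tag).item(0).getTextContent().trim());
            }
            return fields;
        } catch (Exception e) {
            // Malformed XML, a DOCTYPE, or a missing element all end up here.
            throw new IllegalArgumentException("Unreadable test metadata", e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> meta = read("<test-metadata><category>ldapi</category>"
                + "<test-number>00001</test-number><vulnerability>true</vulnerability>"
                + "<cwe>90</cwe></test-metadata>");
        boolean realVulnerability = Boolean.parseBoolean(meta.get("vulnerability"));
        System.out.println(meta.get("category") + " CWE-" + meta.get("cwe") + " real=" + realVulnerability);
    }
}
```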

BenchmarkTest00001.java in the OWASP Benchmark 1.0 simply reads in all the cookie values, looks for a cookie named "foo", and uses the value of this cookie when performing an LDAP query. Here's the code for BenchmarkTest00001.java:

```java
package org.owasp.benchmark.testcode;

import java.io.IOException;

import javax.servlet.ServletException;
import javax.servlet.annotation.WebServlet;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

@WebServlet("/BenchmarkTest00001")
public class BenchmarkTest00001 extends HttpServlet {

    private static final long serialVersionUID = 1L;

    @Override
    public void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        doPost(request, response);
    }

    @Override
    public void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        // some code
        javax.servlet.http.Cookie[] cookies = request.getCookies();

        String param = null;
        boolean foundit = false;
        if (cookies != null) {
            for (javax.servlet.http.Cookie cookie : cookies) {
                if (cookie.getName().equals("foo")) {
                    param = cookie.getValue();
                    foundit = true;
                }
            }
            if (!foundit) {
                // no cookie found in collection
                param = "";
            }
        } else {
            // no cookies
            param = "";
        }

        try {
            javax.naming.directory.DirContext dc = org.owasp.benchmark.helpers.Utils.getDirContext();
            Object[] filterArgs = {"a","b"};
            dc.search("name", param, filterArgs, new javax.naming.directory.SearchControls());
        } catch (javax.naming.NamingException e) {
            throw new ServletException(e);
        }
    }
}
```
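For contrast, the injection disappears if the cookie value can only ever be treated as data inside the filter. According to its Javadoc, the JNDI `DirContext.search(String, String, Object[], SearchControls)` overload used above replaces each `{i}` variable in the filter expression with `filterArgs[i]` and escapes special filter characters in string arguments, so a safe form would look like `dc.search("name", "(uid={0})", new Object[] {param}, new javax.naming.directory.SearchControls())` (the `uid` attribute is only an illustrative assumption). The hypothetical helper below shows the kind of RFC 4515 value escaping involved; it is not code from the Benchmark.

```java
// Hypothetical helper: escapes a value per RFC 4515 so it is treated as literal data
// inside an LDAP search filter instead of as filter syntax.
final class LdapFilterEscapeSketch {

    static String escapeFilterValue(String value) {
        StringBuilder escaped = new StringBuilder(value.length());
        for (char c : value.toCharArray()) {
            switch (c) {
                case '\\': escaped.append("\\5c"); break;
                case '*':  escaped.append("\\2a"); break;
                case '(':  escaped.append("\\28"); break;
                case ')':  escaped.append("\\29"); break;
                case '\0': escaped.append("\\00"); break;
                default:   escaped.append(c);
            }
        }
        return escaped.toString();
    }

    public static void main(String[] args) {
        String cookieValue = "*)(uid=*"; // attacker-controlled "foo" cookie value
        String filter = "(uid=" + escapeFilterValue(cookieValue) + ")";
        System.out.println(filter);      // (uid=\2a\29\28uid=\2a)
    }
}
```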

The following sections describe situations in the Benchmark where the validity or accuracy of the test cases has been debated.

Cookies as a Source of Attack for XSS

Benchmark v1.1 and early versions of 1.2beta included test cases that used cookies as a source of data that flowed into XSS vulnerabilities. The Benchmark treated these tests as false positives because the Benchmark team reasoned that an attacker would already need an XSS vulnerability to set the cookie value, so it wasn't fair or reasonable to consider an XSS vulnerability whose data source was a cookie value as actually exploitable. However, we got feedback from the makers of some tools, such as Fortify, Burp, and Arachni, who disagreed with this analysis and argued that cookies are, in fact, a valid source of attack for XSS vulnerabilities. Given that there are good arguments on both sides of this safe vs. unsafe question, we decided on Aug 25, 2015 to simply remove those test cases from the Benchmark. If we resolve the question in the future, we may add such test cases back in.

Headers as a Source of Attack for XSS

Similarly, the Benchmark team believes that header names aren't a valid source of XSS attacks, for the same reason we thought cookie values aren't: setting them would require an XSS vulnerability to be exploited in the first place. In fact, we feel that this argument is much stronger for header names than for cookie values. Right now, the Benchmark doesn't include any header names as sources for XSS test cases, but we plan to add them and mark them as false positives in the Benchmark.

Some XSS test cases in the Benchmark do use header values as sources, but only 'referer' is treated as a valid XSS source (i.e., those tests are true positives), because other headers are not viable XSS sources. Other headers are, of course, valid sources for other attack vectors, like SQL Injection or Command Injection.

False Positive Scenario: Static Values Passed to Unsafe (Weak) Sinks

The Benchmark has MANY test cases where unsafe data flows in from the browser, but that data is replaced with static content as it goes through the propagators in that specific test case. This static (safe) data then flows to the sink, which may be a weak/unsafe sink, for example an unsafely constructed SQL statement. The Benchmark treats those test cases as false positives because there is absolutely no way for that weakness to be exploited. The NSA Juliet SAST benchmark treats such test cases exactly the same way, as false positives. We do recognize that there are weaknesses in those test cases, even though they aren't exploitable.
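To make the scenario concrete, here is a hypothetical false-positive test case reduced to plain Java (no servlet API). It is loosely modeled on the propagator-then-sink structure described above, but the class, method, and table names are illustrative assumptions rather than code from the Benchmark. A tool that only notices request data entering `propagate` and a concatenated SQL string leaving `buildQuery` would flag it, yet the only value that can ever reach the sink is a constant.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical false-positive test case: tainted input in, weak SQL sink out, never exploitable.
final class StaticValueFalsePositiveSketch {

    // Propagator: the tainted value goes into the list, but a constant is what comes out.
    static String propagate(String tainted) {
        List<String> values = new ArrayList<>();
        values.add("safe");   // index 0: static value
        values.add(tainted);  // index 1: user-controlled value
        values.remove(1);     // the tainted entry is discarded
        return values.get(0); // always "safe"
    }

    // Weak sink: SQL built by string concatenation, but only the constant can reach it.
    static String buildQuery(String requestParam) {
        String bar = propagate(requestParam);
        return "SELECT * FROM USERS WHERE USERNAME='" + bar + "'";
    }

    public static void main(String[] args) {
        System.out.println(buildQuery("' OR '1'='1"));
    }
}
```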

Some SAST tool vendors feel it is appropriate to point out those weaknesses, and that's fine. However, if a tool points those weaknesses out and does not distinguish them from truly exploitable vulnerabilities, then the Benchmark treats those findings as false positives. If the tool allows a user to differentiate these non-exploitable weaknesses from exploitable vulnerabilities, then the Benchmark scorecard generator can use that information to filter out these extra findings (along with any other similarly marked findings) so they don't count against that tool when its Benchmark score is calculated. In the real world, it's important for analysts to be able to filter out such findings if they only have time to deal with the most critical, actually exploitable vulnerabilities. If a tool doesn't make it easy for an analyst to distinguish the two situations, it is doing the analyst a disservice.
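The filtering step described above might look like the following sketch. The `Finding` shape and its `markedNotExploitable` flag are hypothetical: each tool expresses this distinction in its own way (if at all), and the real scorecard generator's parsers are tool-specific.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical model of parsed SAST findings, filtered before scoring.
final class FindingFilterSketch {

    static final class Finding {
        final String testName;
        final boolean markedNotExploitable; // assumed tool-specific "weakness only" marker

        Finding(String testName, boolean markedNotExploitable) {
            this.testName = testName;
            this.markedNotExploitable = markedNotExploitable;
        }
    }

    // Keep only findings the tool itself presents as exploitable; only these are scored.
    static List<Finding> scoredFindings(List<Finding> findings) {
        return findings.stream()
                .filter(f -> !f.markedNotExploitable)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Finding> raw = Arrays.asList(
                new Finding("BenchmarkTest00001", false),
                new Finding("BenchmarkTest00002", true));
        scoredFindings(raw).forEach(f -> System.out.println(f.testName));
    }
}
```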

This issue doesn't affect DAST tools, because they only report what appears to be exploitable to them.

If you are a SAST tool vendor or user, and you believe the Benchmark scorecard generator is counting such findings against a tool even though there is a way to tell them apart, please let the project team know so the scorecard generator can be adjusted to not count those findings against the tool. The Benchmark project's goal is to generate the most fair and accurate results it can. If such an adjustment is made to how a scorecard is generated for a tool, we plan to document that this was done for that tool, and explain how others could perform the same filtering within that tool to get the same focused set of results.

Dead Code

Some SAST tools point out weaknesses in dead code because they might eventually end up being used, and serve as bad coding examples (think cut/paste of code). We think this is fine/appropriate. However, there is no dead code in the OWASP Benchmark (at least not intentionally). So dead code should not be causing any tool to report unnecessary false positives.

The results for 5 free tools (PMD, FindBugs, FindBugs with the FindSecBugs plugin, SonarQube, and OWASP ZAP) against version 1.2beta of the Benchmark are now available here: https://rawgit.com/OWASP/Benchmark/master/scorecard/OWASP_Benchmark_Home.html

We have computed results for all the following tools, but are prohibited from publicly releasing the results for any commercial tools due to their licensing restrictions against such publication. However, we have the ability to compute an average set of results for commercial tools, and the above scorecard includes a 'Commercial Average' page, which includes a summary of results for 6 commercial SAST tools. It is the last item in the Tools menu.

The Benchmark can generate results for the following tools:

Free Static Application Security Testing (SAST) Tools:

- PMD (which really has no security rules) - .xml results file

- FindBugs - .xml results file

- FindBugs with the FindSecurityBugs plugin - .xml results file

- SonarQube - .xml results file

- XANITIZER (requires registration to download) - .xml results file

Note: We looked into supporting Checkstyle and Error Prone, but neither of these free static analysis tools has any security rules, so they would both score all zeroes, just like PMD.

Commercial SAST Tools:

- Checkmarx CxSAST - .xml results file

- Coverity Code Advisor (On-Demand and stand-alone versions) - .json results file

- HP Fortify (On-Demand and stand-alone versions) - .fpr results file

- IBM AppScan Source - .ozasmt results file

- Parasoft Jtest - .xml results file

- SourceMeter - .txt results file of ALL results from VulnerabilityHunter

- Veracode SAST - .xml results file

We are looking for results for other commercial static analysis tools, such as Grammatech CodeSonar and Klocwork. If you have a license for any static analysis tool not already listed above and can run it on the Benchmark and send us the results file, that would be very helpful.

The free SAST tools come bundled with the Benchmark so you can run them yourselves. If you have a license for any commercial SAST tool, you can also run them against the Benchmark. Just put your results files in the /results folder of the project, and then run the BenchmarkScore script for your platform (.sh / .bat) and it will generate a scorecard in the /scorecard directory for all the tools you have results for that are currently supported.

Free Dynamic Application Security Testing (DAST) Tools:

Note: While we support scorecard generators for these Free and Commercial DAST tools, we haven't been able to get a full/clean run against the Benchmark from most of these tools. As such, some of these scorecard generators might still need some work to properly reflect their results. If you notice any problems, let us know.

- Arachni - .xml results file

- OWASP ZAP - .xml results file - ZAP can't do a scan against the full Benchmark yet, but the next weekly build is supposed to be able to. (Stay tuned!)

Commercial DAST Tools:

- Acunetix Web Vulnerability Scanner (WVS) - .xml results file (Generated using command line interface /ExportXML switch)

- Burp Pro - .xml results file

  - You must use Burp Pro v1.6.30 or greater to scan the Benchmark, due to a previous limitation in Burp Pro related to ensuring that the path attribute for cookies was honored. This issue was fixed in the v1.6.30 release.

- HP WebInspect - .xml results file

- IBM AppScan - .xml results file

- Rapid7 AppSpider - .xml results file

- Qualys - We ran Qualys against the 1.2beta of the Benchmark and it found none of the vulnerabilities we test for as far as we can tell. So we haven't implemented a scorecard generator for it. If you get results where you think it does find some real issues, send us the results file and, if confirmed, we'll produce a scorecard generator for it.

If you have access to other DAST Tools, PLEASE RUN THEM FOR US against the Benchmark, and send us the results file so we can build a scorecard generator for that tool.

Commercial Interactive Application Security Testing (IAST) Tools:

- Contrast - .zip results file

WARNING: If you generate results for a commercial tool, be careful whom you distribute them to. Each tool has its own license defining when any results it produces can be released/made public. It is likely to be against the terms of a commercial tool's license to publicly release that tool's score against the OWASP Benchmark. The OWASP Benchmark project takes no responsibility if someone else releases such results. It is for just this reason that the Benchmark project isn't releasing such results itself.

The project has automated test harnesses for these vulnerability detection tools, so we can repeatably run the tools against each version of the Benchmark and automatically produce scorecards in our desired format.

We want to test as many tools as possible against the Benchmark. If you are:

- A tool vendor and want to participate in the project

- Someone who wants to help score a free tool against the project

- Someone who has a license to a commercial tool and the terms of the license allow you to publish tool results, and you want to participate

please let me know!

What is in the Benchmark?

The Benchmark is a Java Maven project. Its primary component is thousands of test cases (e.g., BenchmarkTest00001.java), each of which is a single Java servlet that contains a single vulnerability (either a true positive or a false positive). The vulnerabilities currently span about a dozen different types and are expected to expand significantly in the future.

An expectedresults.csv file is published with each version of the Benchmark (e.g., expectedresults-1.1.csv), and it specifically lists the expected results for each test case. Here's what the first two rows in this file look like for version 1.1 of the Benchmark:

```
# test name, category, real vulnerability, CWE, Benchmark version: 1.1, 2015-05-22
BenchmarkTest00001,crypto,TRUE,327
```

This simply means that the first test case is a crypto test case (use of weak cryptographic algorithms), this is a real vulnerability (as opposed to a false positive), and this issue maps to CWE 327. It also indicates this expected results file is for Benchmark version 1.1 (produced May 22, 2015). There is a row in this file for each of the tens of thousands of test cases in the Benchmark. Each time a new version of the Benchmark is published, a new corresponding results file is generated and each test case can be completely different from one version to the next.
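As a sketch of how a scoring tool might load this file, the Java below parses the format shown above into a map keyed by test name. It assumes comma-separated columns in the order test name, category, real vulnerability, CWE, plus a header row starting with `#`; the class names are hypothetical, and BenchmarkScore's own parser may differ.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical reader for expectedresults-VERSION#.csv, shown on an in-memory string.
final class ExpectedResultsSketch {

    // One expected result: category, whether it is a real vulnerability, and its CWE number.
    static final class Expected {
        final String category;
        final boolean realVulnerability;
        final int cwe;

        Expected(String category, boolean realVulnerability, int cwe) {
            this.category = category;
            this.realVulnerability = realVulnerability;
            this.cwe = cwe;
        }
    }

    static Map<String, Expected> parse(String csv) {
        Map<String, Expected> byTestName = new TreeMap<>();
        for (String line : csv.split("\\R")) {
            String row = line.trim();
            if (row.isEmpty() || row.startsWith("#")) {
                continue; // skip blank lines and the "# test name, ..." header row
            }
            String[] cols = row.split(",");
            byTestName.put(cols[0].trim(), new Expected(
                    cols[1].trim(),
                    Boolean.parseBoolean(cols[2].trim()), // "true" in any case; anything else is false
                    Integer.parseInt(cols[3].trim())));
        }
        return byTestName;
    }

    public static void main(String[] args) {
        String csv = "# test name, category, real vulnerability, CWE, Benchmark version: 1.1, 2015-05-22\n"
                + "BenchmarkTest00001,crypto,TRUE,327\n";
        Expected first = parse(csv).get("BenchmarkTest00001");
        System.out.println(first.category + " real=" + first.realVulnerability + " CWE-" + first.cwe);
    }
}
```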

The Benchmark also comes with a bunch of different utilities, commands, and prepackaged open source security analysis tools, all of which can be executed through Maven goals, including:

- Open source vulnerability detection tools to be run against the Benchmark

- A scorecard generator, which computes a scorecard for each of the tools you have results files for.

What Can You Do With the Benchmark?

- Compile all the software in the Benchmark project (e.g., mvn compile)

- Run a static vulnerability analysis tool (SAST) against the Benchmark test case code

- Scan a running version of the Benchmark with a dynamic application security testing tool (DAST)

  - Instructions on how to run it are provided below

- Generate scorecards for each of the tools you have results files for

  - See the Tool Support/Results page for the list of tools the Benchmark supports generating scorecards for

Getting Started

Before downloading or using the Benchmark make sure you have the following installed and configured properly:

- Git: http://git-scm.com/ or https://github.com/

- Maven: https://maven.apache.org/ (version 3.2.3 or newer works; we heard that 3.0.5 throws an error)

- Java: http://www.oracle.com/technetwork/java/javase/downloads/index.html (Java 7 or 8, 64-bit). Compiling the Benchmark takes a LOT of memory.

Getting, Building, and Running the Benchmark

To download and build everything:

```
$ git clone https://github.com/OWASP/benchmark
$ cd benchmark
$ mvn compile          # This compiles it
$ ./runBenchmark.sh    # This compiles and runs it (runBenchmark.bat on Windows)
```

Then navigate to https://localhost:8443/benchmark/ to go to its home page. It uses a self-signed SSL certificate, so you'll get a security warning when you hit the home page.

Note: We have set the Benchmark app to use up to 6 GB of RAM, which it may need when it is fully scanned by a DAST scanner. The DAST tool probably also requires 3+ GB of RAM. As such, we recommend a 16 GB machine if you are going to try to run a full DAST scan, and at least 4 GB (ideally 8 GB) if you are just going to play around with the running Benchmark app.

Using a VM instead

We have several preconstructed VMs or instructions on how to build one that you can use instead:

- Docker: A Dockerfile is checked into the project here. This Dockerfile should automatically produce a Docker image that has the latest and greatest version of the Benchmark project files.

- VirtualBox: A VirtualBox VM is available here. This VM has an early version of the Benchmark 1.2beta preinstalled. Make sure you do a 'git pull' in the Benchmark directory to download the latest of everything before using it.

- Amazon Web Services (AWS) - Here's how you set up the Benchmark on an AWS VM:

```
sudo yum install git
sudo yum install maven
sudo yum install mvn
sudo wget http://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo -O /etc/yum.repos.d/epel-apache-maven.repo
sudo sed -i s/\$releasever/6/g /etc/yum.repos.d/epel-apache-maven.repo
sudo yum install -y apache-maven
git clone https://github.com/OWASP/benchmark
cd benchmark
chmod 755 *.sh
./runBenchmark.sh                  # to run it locally on the VM
./runRemoteAccessibleBenchmark.sh  # to run it so it can be accessed outside the VM (on port 8443)
```

Running Free Static Analysis Tools Against the Benchmark

There are scripts for running each of the free SAST vulnerability detection tools included with the Benchmark against the Benchmark test cases. On Linux, you might have to make them executable (e.g., chmod 755 *.sh) before you can run them.

Generating Test Results for PMD:

$ ./runPMD.sh (Linux) or runPMD.bat (Windows)

Generating Test Results for FindBugs:

$ ./runFindBugs.sh (Linux) or runFindBugs.bat (Windows)

Generating Test Results for FindBugs with the FindSecBugs plugin:

$ ./runFindSecBugs.sh (Linux) or runFindSecBugs.bat (Windows)

In each case, the script will generate a results file and put it in the /results directory. For example:

Benchmark_1.1-findbugs-v3.0.1-1026.xml

This results file name is carefully constructed to mean the following: It's a results file against the OWASP Benchmark version 1.1, FindBugs was the analysis tool, it was version 3.0.1 of FindBugs, and it took 1026 seconds to run the analysis.

NOTE: If you create a results file yourself, by running a commercial tool for example, you can add the tool's version number and the compute time to the file name in the same way, and the Benchmark scorecard generator will pick this information up and include it in the generated scorecard. If you don't, the scorecard generator might still do this automatically, depending on what metadata the tool includes in its results.
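The naming convention above can be sketched as a small Java helper that splits a results file name into its parts, or builds one for results you produced yourself. This is a hypothetical helper, not part of the Benchmark code. Its regular expression is an assumption inferred from the single example on this page: Benchmark version, tool name, "v" plus tool version, run time in seconds. Names that omit the version or time, such as Benchmark_1.2beta-Contrast.log, will not match.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper (not part of the Benchmark project) for the naming convention
// Benchmark_<benchmarkVersion>-<tool>-v<toolVersion>-<seconds>.<ext>.
// The pattern is an assumption based on Benchmark_1.1-findbugs-v3.0.1-1026.xml.
class ResultsFileName {
    private static final Pattern NAME =
            Pattern.compile("Benchmark_([^-]+)-(.+)-v([^-]+)-(\\d+)\\.(\\w+)");

    final String benchmarkVersion;
    final String tool;
    final String toolVersion;
    final long seconds;

    private ResultsFileName(String benchmarkVersion, String tool, String toolVersion, long seconds) {
        this.benchmarkVersion = benchmarkVersion;
        this.tool = tool;
        this.toolVersion = toolVersion;
        this.seconds = seconds;
    }

    // Splits a file name into its parts, or throws if it doesn't follow the convention.
    static ResultsFileName parse(String fileName) {
        Matcher m = NAME.matcher(fileName);
        if (!m.matches()) {
            throw new IllegalArgumentException("Unexpected results file name: " + fileName);
        }
        return new ResultsFileName(m.group(1), m.group(2), m.group(3), Long.parseLong(m.group(4)));
    }

    // Builds a name in the same layout, e.g. for results produced with a commercial tool.
    static String build(String benchmarkVersion, String tool, String toolVersion,
                        long seconds, String extension) {
        return "Benchmark_" + benchmarkVersion + "-" + tool + "-v" + toolVersion
                + "-" + seconds + "." + extension;
    }

    public static void main(String[] args) {
        ResultsFileName r = parse("Benchmark_1.1-findbugs-v3.0.1-1026.xml");
        // prints: 1.1 findbugs 3.0.1 1026
        System.out.println(r.benchmarkVersion + " " + r.tool + " " + r.toolVersion + " " + r.seconds);
    }
}
```

Building the name with build(...) and parsing it back with parse(...) gives you the same parts, which is a handy way to check that a renamed commercial results file follows the convention.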

Generating Scorecards

The application BenchmarkScore is included with the Benchmark. It parses the output files generated by security tools run against the Benchmark and compares them against the expected results, and produces a set of web pages that detail the accuracy and speed of the tools involved.

The following command will compute a Benchmark scorecard for all the results files in the /results directory. The generated scorecard is put into the /scorecard directory.

$ ./createScorecard.sh (Linux) or createScorecard.bat (Windows)

An example of a real scorecard for some open source tools is provided at the top of the Tool Support/Results tab so you can see what one looks like.

We recommend including the Benchmark version number in any results file name, in order to help prevent mismatches between the expected results and the actual results files. A tool will not score well against the wrong expected results.

Customizing Your Scorecard Generation

The createScorecard scripts are very simple. They only have one line. This is what the 1.2beta version looks like:

mvn validate -Pbenchmarkscore -Dexec.args="expectedresults-1.2beta.csv results"

This Maven command simply runs the benchmarkscore application with two parameters. The first is the Benchmark expected results file to compare the tool results against, and the second is the name of the directory that contains all the results from tools run against that version of the Benchmark. If you have tool results for an older version of the Benchmark, such as 1.1, then you would do something like this instead:

mvn validate -Pbenchmarkscore -Dexec.args="expectedresults-1.1.csv 1.1_results"

To keep things organized, we actually put the expected results file inside the same results folder for that version of the Benchmark, so our command looks like this:

mvn validate -Pbenchmarkscore -Dexec.args="1.1_results/expectedresults-1.1.csv 1.1_results"

In all cases, the generated scorecard is put in the /scorecard folder.

WARNING: If you generate results for a commercial tool, be careful who you distribute it to. Each tool has its own license defining when any results it produces can be released/made public. It is likely to be against the terms of a commercial tool's license to publicly release that tool's score against the OWASP Benchmark. The OWASP Benchmark project takes no responsibility if someone else releases such results. It is for just this reason that the Benchmark project isn't releasing such results itself.

People frequently have difficulty scanning the Benchmark with various tools for many reasons, including the size of the Benchmark app and its codebase, and the complexity of the tools used. Here is some guidance for some of the tools we have used to scan the Benchmark. If you've learned any tricks for getting better or easier results with a particular tool against the Benchmark, let us know or update this page directly.

Generic Tips

Because of the size of the Benchmark, you may need to give your tool more memory before it starts the scan. If it's a Java-based tool, you can pass it more memory like this:

-Xmx4G (This gives the Java application 4 Gig of memory)
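To confirm that an -Xmx setting actually reached the JVM, a tiny Java program can print the maximum heap size the JVM reports. This is a generic sketch; the class name MaxHeapCheck is made up and nothing like it ships with the Benchmark. Launch it with the same memory option you pass to your tool, for example `java -Xmx4G MaxHeapCheck.java`. Running a single source file this way requires Java 11 or later.

```java
// Hypothetical helper: prints the maximum heap size the running JVM will use,
// so you can check that an -Xmx option (e.g. -Xmx4G) took effect.
class MaxHeapCheck {
    // Runtime.maxMemory() returns Long.MAX_VALUE when there is no limit.
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024L * 1024L);
    }

    public static void main(String[] args) {
        System.out.println("Max heap: " + maxHeapMiB() + " MiB");
    }
}
```

Depending on the garbage collector, the reported value can be somewhat below the -Xmx figure. It should still be in the right ballpark, so a result of a few hundred MiB when you asked for 4 Gig means the option was not applied.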

SAST Tools

FindBugs

We include this free tool in the Benchmark and it's all dialed in. Simply run the script runFindBugs.(sh or bat). If you want to run a different version of FindBugs, just change its version number in the Benchmark pom.xml file.

FindBugs with FindSecBugs

FindSecurityBugs is a great plugin for FindBugs that significantly improves FindBugs' ability to find security issues. We include this free tool in the Benchmark and it's all dialed in. Simply run the script runFindSecBugs.(sh or bat). If you want to run a different version of FindSecBugs, just change the version number of the findsecbugs-plugin artifact in the Benchmark pom.xml file.

HP Fortify

If you are using the Audit Workbench, you can give it more memory and make sure you invoke it in 64-bit mode by doing this:

Windows:
set AWB_VM_OPTS="-Xmx2G -XX:MaxPermSize=256m"

Linux/Mac:
export AWB_VM_OPTS="-Xmx2G -XX:MaxPermSize=256m"

Then, on either platform:
auditworkbench -64

We found it was easier to use Fortify's Maven support to scan the Benchmark, and to do it in two phases: translate, then scan. We did something like this:

Translate Phase:
export JAVA_HOME=$(/usr/libexec/java_home)
export PATH=$PATH:/Applications/HP_Fortify/HP_Fortify_SCA_and_Apps_4.10/bin
export SCA_VM_OPTS="-Xmx2G -version 1.7"
mvn sca:clean
mvn sca:translate

Scan Phase:
export JAVA_HOME=$(/usr/libexec/java_home)
export PATH=$PATH:/Applications/HP_Fortify/HP_Fortify_SCA_and_Apps_4.10/bin
export SCA_VM_OPTS="-Xmx10G -version 1.7"
mvn sca:scan

PMD

We include this free tool in the Benchmark and it's all dialed in. Simply run the script runPMD.(sh or bat). If you want to run a different version of PMD, just change its version number in the Benchmark pom.xml file. (NOTE: PMD doesn't find any security issues. We include it because it's interesting to know that it doesn't.)

SonarQube

We include this free tool in the Benchmark and it's mostly dialed in, but it's a bit tricky because SonarQube has two parts: a standalone scanner for Java, and a web application that accepts the scanner's results and can then produce the results file the Benchmark scorecard generator needs for SonarQube. Running the script runSonarQube.(sh or bat) will generate the results, but if the SonarQube web application isn't running where the runSonarQube script expects it to be, the script will fail.

If you want to run a different version of SonarQube, just change its version number in the Benchmark pom.xml file.
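Because runSonarQube fails when the SonarQube web application isn't running where it expects, a quick pre-flight check can save a wasted run. The Java sketch below only checks whether something is listening on a TCP port. The host and port it uses by default, localhost:9000 (SonarQube's default web port), are assumptions; use whatever address your runSonarQube script is actually configured for.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical pre-flight check: is anything listening where we expect the
// SonarQube web application to be? (localhost:9000 is an assumed default.)
class SonarPreflight {
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // connection refused, timed out, or unknown host
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9000;
        if (isListening(host, port, 2000)) {
            System.out.println("Something is listening at " + host + ":" + port);
        } else {
            System.out.println("Nothing is listening at " + host + ":" + port
                    + "; start SonarQube before running runSonarQube.");
            System.exit(1);
        }
    }
}
```

This only confirms that the port is open. It does not prove that the server there is SonarQube, or that it has finished starting up.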

DAST Tools

Acunetix WVS

From the vendor:

The main settings to tweak in terms of improving coverage are the following:

1. WVS is set up by default to ignore files in a directory if there are more than 150 files. This setting can be tweaked from Configuration > Scan Settings > Crawling Options > Maximum number of files in a directory. Set this to 15000 and it should then find all the files in the Benchmark.

2. You also need to edit a setting that is not accessible via the GUI. By default, if WVS finds a lot of files with the same parameter name it starts to ignore them. You can change this by editing the settings.xml file (e.g., C:\ProgramData\Acunetix WVS 10\Data\General\settings.xml). Open it in a text editor and find the text 'InputLimitationHeuristics' and set Enabled= to 0.

Burp Pro

You must use Burp Pro v1.6.29 or greater to scan the Benchmark due to a previous limitation in Burp Pro related to ensuring the path attribute for cookies was honored. This issue was fixed in the v1.6.29 release.

To scan, first spider the entire Benchmark, and then select the /benchmark URL and actively scan that branch. You can skip all the .html pages and any other pages that Burp says have no parameters.

OWASP ZAP

ZAP 2.4.2 and earlier require additional memory and a larger database to be able to scan the Benchmark. The Benchmark project asked for this to be easier to configure, so the ZAP team did two things:

- They made the default database size bigger.

- They made the amount of memory configurable via the GUI, so when you restart ZAP it uses as much memory as configured:

- Tools --> Options --> JVM: Recommended setting: -Xmx2048m (or larger). (Don't forget to restart ZAP.)

Both of these features work in the weekly release dated 2015-09-28 or later. We strongly recommend using a recent weekly release of ZAP against the Benchmark, both for these new features and because numerous issues in ZAP were fixed to make a ZAP scan of the Benchmark reasonably effective.

It is also recommended that you use both the traditional and Ajax Spiders, as well as the DOM XSS add-on (available from the ZAP marketplace).

To install the DOM XSS add-on:

- Help --> Check for Updates --> MarketPlace --> Alpha: DOM XSS Active Scanner Rule. (Check the box and hit 'Install Selected') (If you can't find it, it might already be installed.)

To run the two spiders first before doing the active scan:

- Click on the Show All Tabs button (if the two spider tabs aren't yet visible)

- Go to Spider tab (the black spider)

- Click on New Scan button

- Enter: https://localhost:8443/benchmark/ into the 'Starting Point' box and hit 'Start Scan'

- When Spider completes, click on 'benchmark' folder in Site Map, right click and select: 'Attack --> AJAX Spider'

- Hit 'Start Scan' in AJAX Spider dialog (Firefox required for this step)

- You should see Firefox pop up and it will navigate through the entire Benchmark.

- Now right-click on the 'benchmark' folder again and select: Attack --> Active Scan

- The active scan will take several hours (3+) to complete.

For a faster active scan, you can disable ZAP's database log. At the moment, you need to edit the file zapdb.script (in the "db" directory) and change the line:

SET FILES LOG TRUE

to:

SET FILES LOG FALSE
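If you prefer to script the zapdb.script change rather than edit the file by hand, a small Java program can make the same text substitution. This is a hypothetical sketch. The default path db/zapdb.script, relative to ZAP's home directory, is an assumption, so pass the real location as the first argument. It is presumably safest to run it while ZAP is shut down, so that ZAP doesn't rewrite the file afterwards. It needs Java 11 or later for Path.of and Files.readString/writeString.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper: turns off ZAP's database log by rewriting the
// "SET FILES LOG TRUE" line in zapdb.script to "SET FILES LOG FALSE".
class DisableZapDbLog {
    static String disableLog(String script) {
        return script.replace("SET FILES LOG TRUE", "SET FILES LOG FALSE");
    }

    public static void main(String[] args) throws IOException {
        // Default path is an assumption; pass the real location of zapdb.script.
        Path script = Path.of(args.length > 0 ? args[0] : "db/zapdb.script");
        String original = Files.readString(script);
        String updated = disableLog(original);
        if (updated.equals(original)) {
            System.out.println("No 'SET FILES LOG TRUE' line found; file left unchanged.");
        } else {
            Files.writeString(script, updated);
            System.out.println("Database log disabled in " + script);
        }
    }
}
```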

IAST Tools

Interactive Application Security Testing (IAST) tools work differently than scanners. IAST tools monitor an application as it runs to identify application vulnerabilities using context from inside the running application. Typically these tools run continuously, immediately notifying users of vulnerabilities, but you can also get a full report of an entire application. To do this, we simply run the Benchmark application with an IAST agent and use a crawler to hit all the pages.

Contrast

To use Contrast, we simply add the agent to the Benchmark environment and run the BenchmarkCrawler. The entire process should only take a few minutes. We provide a few scripts, which simply add the -javaagent:contrast.jar flag to the Benchmark launch configuration. We have tested on MacOS, Ubuntu, and Windows. Be sure your VM has at least 4 Gig of memory.

- Ensure your environment has Java, Maven, and git installed, then build the Benchmark project

$ git clone https://github.com/OWASP/Benchmark.git
$ cd Benchmark
$ mvn compile

- Download a licensed copy of the Contrast Agent (contrast.jar) from your Contrast TeamServer account and put it in the /Benchmark/tools/Contrast directory.

$ cp ~/Downloads/contrast.jar tools/Contrast

- In Terminal 1, launch the Benchmark application and wait until it starts

$ ./runBenchmark_wContrast.sh (.bat on Windows)
[INFO] Scanning for projects...
[INFO]
[INFO] ------------------------------------------------------------------------
[INFO] Building OWASP Benchmark Project 1.2beta
[INFO] ------------------------------------------------------------------------
[INFO] ...
[INFO] [talledLocalContainer] Tomcat 8.x started on port [8443]
[INFO] Press Ctrl-C to stop the container...

- In Terminal 2, launch the crawler and wait a minute or two for the crawl to complete.

$ ./runCrawler.sh (.bat on Windows)

- A Contrast report is generated in /Benchmark/tools/Contrast/working/contrast.log. This report will be automatically copied (and renamed with the version number) to the /Benchmark/results directory.

$ more tools/Contrast/working/contrast.log
2016-04-22 12:29:29,716 [main b] INFO - Contrast Runtime Engine
2016-04-22 12:29:29,717 [main b] INFO - Copyright (C) 2012
2016-04-22 12:29:29,717 [main b] INFO - Pat. 8,458,789 B2
2016-04-22 12:29:29,717 [main b] INFO - Contrast Security, Inc.
2016-04-22 12:29:29,717 [main b] INFO - All Rights Reserved
2016-04-22 12:29:29,717 [main b] INFO - https://www.contrastsecurity.com/
...

- Press Ctrl-C to stop the Benchmark in Terminal 1. (Note: on Windows, select "N" when asked "Terminate batch job (Y/N)?")

[INFO] [talledLocalContainer] Tomcat 8.x is stopped
Copying Contrast report to results directory

- Generate scorecards in /Benchmark/scorecard

$ ./createScorecards.sh (.bat on Windows)
Analyzing results from Benchmark_1.2beta-Contrast.log
Actual results file generated: /Users/owasp/Projects/Benchmark/scorecard/Benchmark_v1.2beta_Scorecard_for_Contrast.csv
Report written to: /Users/owasp/Projects/Benchmark/scorecard/Benchmark_v1.2beta_Scorecard_for_Contrast.html

- Open the Benchmark Scorecard in your browser

/Users/owasp/Projects/Benchmark/scorecard/Benchmark_v1.2beta_Scorecard_for_Contrast.html

Benchmark v1.0 - Released April 15, 2015 - This initial release included over 20,000 test cases in 11 different vulnerability categories. As this initial version was not a runnable application, it was only suitable for assessing static analysis tools (SAST).

Benchmark v1.1 - Released May 23, 2015 - This update fixed some inaccurate test cases, and made sure that every vulnerability area included both True Positives and False Positives.

Benchmark Scorecard Generator - Released July 10, 2015 - The ability to automatically and repeatably produce a scorecard of how well tools do against the Benchmark was released for most of the SAST tools supported by the Benchmark. Scorecards present graphical as well as statistical data on how well a tool does against the Benchmark down to the level of detail of how exactly it did against each individual test in the Benchmark. The latest public scorecards are available at: [1] Support for producing scorecards for additional tools is being added frequently and the current full set is documented on the Tool Support/Results and Quick Start tabs of this wiki.

Benchmark v1.2beta - Released Jul 10, 2015 - The first release of a fully runnable version of the Benchmark to support assessing all types of vulnerability detection and prevention technologies, including DAST, IAST, RASP, WAFs, etc. (This version has been updated with minor fixes numerous times since its initial release.) This involved creating a user interface for every test case, and enhancing each test case to make sure it's actually exploitable, not just using something that is theoretically weak. This release has under 3,000 test cases to make it practical to scan the entire Benchmark with a DAST tool in a reasonable amount of time on commodity hardware. The next release is expected to be larger, but we aren't sure how large. Maybe more like 5,000 or even 10,000 test cases.

Next Release --> Benchmark 1.2 - We are currently working with a number of DAST tool developers, getting their feedback on the size, accuracy, and other characteristics of the Benchmark and making agreed-upon improvements. Once we have enough performance and accuracy data, we'll be able to decide what size the Benchmark should be to support DAST and SAST assessments and release a final 1.2 version. We'll then have to rerun all the tools against this version to get a baseline set of results to compare against each other.

Post Benchmark 1.2 steps:

While we don't have hard and fast rules of exactly what we are going to do next, enhancements in the following areas are goals for the project:

- Add new vulnerability categories

- Add support for popular server side Java frameworks (since the Benchmark is written in Java)

- Add support for popular client side JavaScript frameworks

- Add support for scorecard generation for additional tools (a continuous goal)

We also plan to do a bunch of data analysis on the results in order to see if we can learn and report some interesting things about the strengths and weaknesses of vulnerability analysis tools of all different types.

1. How are the scores computed for the Benchmark?

Each test case has a single vulnerability of a specific type. It's either a real vulnerability (True Positive) or not (False Positive). We document all the test cases for each version of the Benchmark in the expectedresults-VERSION#.csv file (e.g., expectedresults-1.1.csv). This file lists the test case name, the CWE type of the vulnerability, and whether it is a True Positive or not. The Benchmark supports scorecard generators for computing exactly how a tool did when analyzing a version of the Benchmark. The full list of supported tools is on the Tool Support/Results tab. For each tool there is a parser that can parse the native results format for that tool (usually XML). For each test case, this parser simply checks whether the tool reported a vulnerability of the expected type in the test case source code file (for SAST) or the test case URL (for DAST/IAST). If it did, and the test case was a True Positive, the tool gets credit for finding it. If it is a False Positive test, and the tool reports that type of finding, then it's recorded as a False Positive. If the tool didn't report that type of vulnerability for a test case, then it gets either a False Negative or a True Negative, as appropriate. After calculating all of the individual test case results, a scorecard is generated providing a chart and statistics for that tool across all the vulnerability categories, and pages are also created comparing different tools to each other in each vulnerability category (if multiple tools are being scored together).

A detailed file explaining exactly how that tool did against each individual test case in that version of the Benchmark is produced as part of scorecard generation, and is available via the Actual Results link on each tool's scorecard page. (e.g., Benchmark_v1.1_Scorecard_for_FindBugs.csv).
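The per-test-case logic described above can be sketched in a few lines of Java. This is an illustration, not the scorecard generator's actual code. The class and field names are hypothetical, the example data is made up, and the tool's findings are assumed to be already parsed into a map from test case name to the CWE numbers reported for it. The sketch also prints true positive rate minus false positive rate (Youden's index) as one common way to combine the two rates into a single number. A finding with the wrong CWE for a test case simply doesn't count.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;

// Illustrative sketch of Benchmark-style scoring; all names are hypothetical.
class ScoreSketch {
    // One expected-results entry: test case name, expected CWE, and whether it's a real vulnerability.
    static final class Expected {
        final String testName;
        final int cwe;
        final boolean realVulnerability;

        Expected(String testName, int cwe, boolean realVulnerability) {
            this.testName = testName;
            this.cwe = cwe;
            this.realVulnerability = realVulnerability;
        }
    }

    // Counts of the four possible outcomes.
    static final class Tally {
        int tp, fp, fn, tn;

        double truePositiveRate() {
            return tp + fn == 0 ? 0.0 : (double) tp / (tp + fn);
        }

        double falsePositiveRate() {
            return fp + tn == 0 ? 0.0 : (double) fp / (fp + tn);
        }
    }

    // A test case counts as "flagged" only if the tool reported the expected CWE for it;
    // findings of any other CWE in that test case are ignored.
    static Tally score(List<Expected> expected, Map<String, Set<Integer>> reported) {
        Tally t = new Tally();
        for (Expected e : expected) {
            boolean flagged = reported.getOrDefault(e.testName, Set.of()).contains(e.cwe);
            if (e.realVulnerability) {
                if (flagged) t.tp++; else t.fn++;
            } else {
                if (flagged) t.fp++; else t.tn++;
            }
        }
        return t;
    }

    public static void main(String[] args) {
        // Made-up data for illustration only.
        List<Expected> expected = List.of(
                new Expected("BenchmarkTest00001", 89, true),
                new Expected("BenchmarkTest00002", 89, false),
                new Expected("BenchmarkTest00003", 79, true),
                new Expected("BenchmarkTest00004", 79, false));
        Map<String, Set<Integer>> reported = Map.of(
                "BenchmarkTest00001", Set.of(89),
                "BenchmarkTest00002", Set.of(89),
                "BenchmarkTest00003", Set.of(22)); // wrong CWE for this test case: not counted
        Tally t = score(expected, reported);
        // Here TP=1, FP=1, FN=1, TN=1, so TPR=0.5, FPR=0.5 and TPR-FPR=0.0
        System.out.printf("TP=%d FP=%d FN=%d TN=%d TPR=%.2f FPR=%.2f TPR-FPR=%.2f%n",
                t.tp, t.fp, t.fn, t.tn, t.truePositiveRate(), t.falsePositiveRate(),
                t.truePositiveRate() - t.falsePositiveRate());
    }
}
```

Keying the match on the expected CWE is what keeps a tool from getting credit, or blame, for reporting an unrelated vulnerability type in a test case.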

2. What if the tool I'm using doesn't have a scorecard generator for it?

Send us the results file! We'll be happy to create a parser for that tool so it's supported.

3. What if a tool finds other unexpected vulnerabilities?

We are sure there are vulnerabilities we didn't intend to be there and we are eliminating them as we find them. If you find some, let us know and we'll fix them too. We are primarily focused on unintentional vulnerabilities in the categories of vulnerabilities the Benchmark currently supports, since that is what is actually measured.

Right now, two types of vulnerabilities that get reported are ignored by the scorecard generator:

- Vulnerabilities in categories not yet supported

- Vulnerabilities of a type that is supported, but reported in test cases not of that type

In the case of #2, false positives reported in unexpected areas are also ignored, which is primarily a DAST problem. Right now those false positives are completely ignored, but we are thinking about including them in the false positive score in some fashion. We just haven't decided how yet.

4. How should I configure my tool to scan the Benchmark?

All tools support various levels of configuration in order to improve their results. The Benchmark project, in general, is trying to compare out-of-the-box capabilities of tools. However, if a few simple tweaks to a tool can be done to improve that tool's score, that's fine. We'd like to understand what those simple tweaks are, and document them here, so others can repeat those tests in exactly the same way. For example, just turn on the 'test cookies and headers' flag, which is off by default. Or turn on the 'advanced' scan, so it will work harder and find more vulnerabilities. It's simple things like this we are talking about, not an extensive effort to teach the tool about the app, or 'expert' configuration of the tool.

So, if you know of some simple tweaks to improve a tool's results, let us know what they are and we'll document them here so everyone can benefit and make it easier to do apples to apples comparisons. And we'll link to that guidance once we start documenting it, but we don't have any such guidance right now.

5. I'm having difficulty scanning the Benchmark with a DAST tool. How can I get it to work?

We've run into two primary issues that cause problems for DAST tools.

a) The Benchmark Generates Lots of Cookies

The Burp team pointed out a cookies bug in the 1.2beta Benchmark. Each Weak Randomness test case generates its own cookie, 1 per test case. This caused the creation of so many cookies that servers would eventually start returning 400 errors because there were simply too many cookies being submitted in a request. This was fixed in the Aug 27, 2015 update to the Benchmark by setting the path attribute for each of these cookies to be the path to that individual test case. Now, only at most one of these cookies should be submitted with each request, eliminating this 'too many cookies' problem. However, if a DAST tool doesn't honor this path attribute, it may continue to send too many cookies, making the Benchmark unscannable for that tool. Burp Pro prior to 1.6.29 had this issue, but it was fixed in the 1.6.29 release.
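The fix relies on the standard cookie path-matching rule (RFC 6265, section 5.1.4). A client should only send a cookie when the request path path-matches the cookie's Path attribute. The Java sketch below implements that rule to show why per-test-case paths limit each request to at most one of these cookies. The URL paths in it are hypothetical examples of per-test-case paths, not necessarily the Benchmark's real URLs.

```java
import java.util.List;
import java.util.stream.Collectors;

// Sketch of the RFC 6265 (section 5.1.4) path-match rule a well-behaved client uses
// to decide which cookies to send with a request.
class CookiePathMatch {
    static boolean pathMatches(String requestPath, String cookiePath) {
        if (requestPath.equals(cookiePath)) {
            return true;
        }
        if (requestPath.startsWith(cookiePath)) {
            // Either the cookie path ends with "/", or the next character of the request
            // path is "/", so a cookie with path "/a/b" is not sent to "/a/bc".
            return cookiePath.endsWith("/") || requestPath.charAt(cookiePath.length()) == '/';
        }
        return false;
    }

    // Returns the cookie paths whose cookies would be sent with a request to requestPath.
    static List<String> cookiesSent(String requestPath, List<String> cookiePaths) {
        return cookiePaths.stream()
                .filter(path -> pathMatches(requestPath, path))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical per-test-case cookie paths.
        List<String> perTestPaths = List.of(
                "/benchmark/weakrand-00/BenchmarkTest00001",
                "/benchmark/weakrand-00/BenchmarkTest00002",
                "/benchmark/weakrand-00/BenchmarkTest00003");
        // Only the cookie scoped to BenchmarkTest00002 is sent with this request.
        System.out.println(cookiesSent("/benchmark/weakrand-00/BenchmarkTest00002", perTestPaths));
    }
}
```

A client that ignores the Path attribute would instead send every one of these cookies on every request. That is how the 'too many cookies' problem described above shows up in tools that don't honor the path.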

b) The Benchmark is a BIG Application

Yes. It is, so you might have to give your scanner more memory than it normally uses by default in order to successfully scan the entire Benchmark. Please consult your tool vendor's documentation on how to give it more memory.

Your machine itself might not have enough memory in the first place. For example, we were not able to successfully scan the 1.2beta with OWASP ZAP with only 8 Gig of RAM. So, you might need a more powerful machine or use a cloud provided machine to successfully scan the Benchmark with certain DAST tools. You may have similar problems with SAST tools against large versions of the Benchmark, like the 1.1 release.

The following people and organizations, and many others, have contributed to this project and their contributions are much appreciated!

- DHS - The U.S. Department of Homeland Security, for providing financial support to this important project.

- Juan Gama - Development of initial release and continued support

- Ken Prole - Assistance with automated scorecard development using CodeDx

- Nick Sanidas - Development of initial release

- Denim Group - Contribution of scan results to facilitate scorecard development

- Tasos Laskos - Significant feedback on the DAST version of the Benchmark.

- Ann Campbell - From SonarSource - for fixing our SonarQube results parser

- Lots of Vendors - Many vendors have provided us with either trial licenses we can use, or they have run their tools themselves and sent us results files. Many have also provided valuable feedback so we can make the Benchmark more accurate.

- The CWE project for providing a mapping mechanism to easily map test cases to issues found by vulnerability detection tools.

We are looking for volunteers. Please contact Dave Wichers if you are interested in contributing new test cases, tool results run against the benchmark, or anything else.