Code Review and Static Analysis with tools

Revision as of 07:45, 28 May 2009


- 1 Static Analysis Curriculum

  - 1.1 Logistics Preparation for Hands-On

    - 1.1.1 Registration

    - 1.1.2 Students’ prerequisites

    - 1.1.3 Tool license and Vendor IP

    - 1.1.4 Session 1: Intro To Static Analysis, May 7th 2009

    - 1.1.5 Session 2: Tool Assisted Code Reviews, July 30th 2009

    - 1.1.6 Session 3: Customization Lab (Fortify), August 13th 2009

    - 1.1.7 Session 4: Customization Lab (Ounce Labs), August 27th 2009

    - 1.1.8 Session 5: Tool Adoption and Deployment, September 17th 2009

- 2 Code Review and Static Analysis with tools

- 3 Organizational

- 4 Customization

Static Analysis Curriculum

- For an introduction to the OWASP Static Analysis (SA) Track goals, objectives, and session roadmap, please see this presentation.

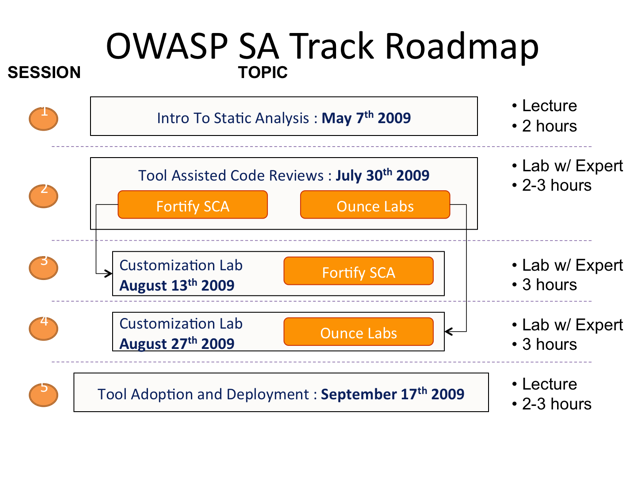

The following is the agenda for the Static Analysis track of the OWASP Northern Virginia (NoVA) Chapter.

Logistics Preparation for Hands-On

Estimated classroom size for hands-on sessions: 30 stations maximum. The number of students can be larger because people may want to pair up, but we will enforce a hard limit of 40 attendees.

Registration

Registration for sessions will be on a first-come, first-served basis. However, we will give preference to people who attend regularly and sign up for multiple sessions. Students will also have to complete a short interview before the session so the instructors can gauge their skill level and motivation. Students must meet the prerequisites for the sessions they sign up for. We ask students to bring their laptops to the hands-on sessions with software such as SSH preinstalled. Basic knowledge of code is also required for all sessions except the last one. Registration by email will open in mid-June or earlier.

Students’ prerequisites

All students will need to bring their own laptops and use them as clients to connect to the host machines; we will support Windows, Mac OS, and Unix users. Laptops should have at least 2 GB of RAM and an SSH client installed.

Tool license and Vendor IP

Vendors will need to provide tool licenses for the hands-on sessions. Students will not install the tools on their own machines; instead, they will use copies of the tools running in a remote VM image. This is NOT a competition. The purpose is NOT to compare tools, so different source code will be picked for each vendor to mitigate side-by-side comparison of tools and findings. Vendors are not allowed to interfere with other vendors’ sessions or demos (note: we have only two vendors, Fortify and Ounce Labs). Questions comparing the participating vendors’ tools are out of scope. Vendors are free to present features and particularities exclusive to their tools.

Session 1: Intro To Static Analysis, May 7th 2009

- Speaker: Eric Dalci

- Time: 2 hours + open discussion

- Classroom size: Open

This presentation will give a taste of what static analysis is.

Session 2: Tool Assisted Code Reviews, July 30th 2009

- Speakers: Eric Dalci, Bruce Mayhew (Ounce Labs), and Fortify (to be confirmed)

- Time: 2.5 hours

- Logistics: Hands-on setup as described in the Logistics section.

- Location: TBD

- Classroom size: 30 stations, 40 attendees max

This is an introductory course on two static analysis tools (Fortify SCA and Ounce Labs 6):

- Fortify will demo its tool and scan WebGoat.

- Ounce Labs will demo its tool, but we may scan a different project, such as HacmeBank, to avoid tool comparison.

- An open discussion will follow each demo so that students can ask the vendors questions.

- Vendors should not interfere with each other’s sessions. Questions about tool comparison will not be answered, since that is not the goal of this session.

Session 3: Customization Lab (Fortify), August 13th 2009

- Speaker: Mike Ware

- Time: 3 hours

- Logistics: Hands-on setup as described in the Logistics section.

- Location: TBD

- Classroom size: 30 stations, 40 attendees max

- Prerequisite: Attended session 2

Mike will train the students on how to customize the Fortify Source Code Analyzer (SCA).

Agenda (draft):

- Approach to auditing scan results to determine true positives and false positives (0.5 hours)

- Custom rules (2 hours)

  - Hands-on examples of different rule types applied to code that resembles real business logic:

    - Data flow sources and sinks [private data sent to a custom logger; turning web service entry points into data flow rules?]

    - Data flow cleanse and pass-through [cleanse: HTML escaping; pass-through: third-party library]

    - Semantic [use of a sensitive API, e.g. getEmployeeSSN()]

    - Structural [all Struts ActionForms must extend a custom base ActionForm]

    - Configuration [properties: data.encryption = off]

    - Control flow [always call securityCheck() before downloadFile()]

- Filtering (0.5 hours)

  - Prioritizing remediation efforts

    - Priority filters (e.g., P1, P2, etc.)

  - Isolating findings ("security controls" example)

    - Authentication

    - Authorization

    - Data validation

    - Session management

    - Etc.
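The data flow rule types in the Session 3 agenda (sources, sinks, cleanse, and pass-through) can be illustrated with a toy taint tracker. This is a minimal sketch, not Fortify SCA rule syntax: the function names (getParameter, customLog, htmlEscape, thirdPartyFormat), the `Call` representation, and the assumption that the analyzer cannot see inside third-party library code are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional, Set, Tuple

# Hypothetical rule tables; every function name here is illustrative only.
SOURCES = {"getParameter", "getEmployeeSSN"}  # results carry tainted data
SINKS = {"customLog"}                         # must never receive tainted data
CLEANSERS = {"htmlEscape"}                    # results are always clean
PASS_THROUGH = {"thirdPartyFormat"}           # taint flows from arguments to result
OPAQUE_LIBRARY = {"thirdPartyFormat"}         # library code the analyzer cannot see


@dataclass
class Call:
    func: str                                      # called function
    args: List[str] = field(default_factory=list)  # argument variable names
    target: Optional[str] = None                   # variable receiving the result


def find_tainted_sinks(program: List[Call],
                       pass_through: FrozenSet[str] = frozenset(PASS_THROUGH)
                       ) -> List[Tuple[int, str]]:
    """Return (statement index, sink name) for each sink reached by tainted data."""
    tainted: Set[str] = set()
    findings = []
    for i, call in enumerate(program):
        arg_tainted = any(a in tainted for a in call.args)
        if call.func in SINKS and arg_tainted:
            findings.append((i, call.func))
        if call.target is None:
            continue
        if call.func in SOURCES:
            result_tainted = True
        elif call.func in CLEANSERS:
            result_tainted = False
        elif call.func in pass_through:
            result_tainted = arg_tainted
        elif call.func in OPAQUE_LIBRARY:
            result_tainted = False        # no rule: taint is silently lost
        else:
            result_tainted = arg_tainted  # visible application code propagates taint
        if result_tainted:
            tainted.add(call.target)
        else:
            tainted.discard(call.target)
    return findings


# Hypothetical program: private data flows through a third-party formatter into a logger.
EXAMPLE_PROGRAM = [
    Call("getEmployeeSSN", [], "ssn"),         # source
    Call("thirdPartyFormat", ["ssn"], "msg"),  # library call (pass-through rule)
    Call("customLog", ["msg"]),                # sink: should be flagged
    Call("htmlEscape", ["ssn"], "safe"),       # cleanse rule
    Call("customLog", ["safe"]),               # sink: clean data, not flagged
]

if __name__ == "__main__":
    print(find_tainted_sinks(EXAMPLE_PROGRAM))                            # [(2, 'customLog')]
    print(find_tainted_sinks(EXAMPLE_PROGRAM, pass_through=frozenset()))  # []
```

Removing the pass-through rule makes the first logger call disappear from the results (a false negative), and removing `htmlEscape` from the cleanse rules would flag the second logger call (a false positive).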
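The control flow rule in the same agenda (always call securityCheck() before downloadFile()) is a property of every execution path rather than of a single data value. The sketch below checks it over a small hand-written control flow graph by exploring (node, guard-seen) states breadth-first; the graph format and the `logAccess` node are hypothetical, and a real analyzer would build the graph from the source code itself.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical CFG format: node -> (function called at the node, successor nodes).
Cfg = Dict[str, Tuple[Optional[str], List[str]]]

CFG: Cfg = {
    "entry": (None, ["auth", "skip"]),
    "auth": ("securityCheck", ["download"]),
    "skip": ("logAccess", ["download"]),      # branch that bypasses the check
    "download": ("downloadFile", ["exit"]),
    "exit": (None, []),
}


def unguarded_calls(cfg: Cfg, entry: str,
                    guard: str = "securityCheck",
                    action: str = "downloadFile") -> List[str]:
    """Return nodes calling `action` that some path reaches without calling `guard`."""
    seen = set()
    queue = deque([(entry, False)])  # (node, has the guard run on this path?)
    violations = set()
    while queue:
        node, checked = queue.popleft()
        if (node, checked) in seen:
            continue                 # each state is explored once, so loops terminate
        seen.add((node, checked))
        func, successors = cfg[node]
        if func == action and not checked:
            violations.add(node)
        checked = checked or func == guard
        for nxt in successors:
            queue.append((nxt, checked))
    return sorted(violations)


if __name__ == "__main__":
    print(unguarded_calls(CFG, "entry"))  # ['download']
```

Tracking only a boolean per path keeps the state space at twice the number of nodes, which is why the check terminates even when the graph contains loops.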

Session 4: Customization Lab (Ounce Labs), August 27th 2009

- Speaker: Nabil Hannan

- Time: 3 hours

- Logistics: Hands-on setup as described in the Logistics section.

- Location: TBD

- Classroom size: 30 stations, 40 attendees max

- Prerequisite: Attended session 2

Nabil will train the students on how to customize the Ounce Labs 6 tool.

Agenda (draft):

- Approach to auditing scan results to determine true positives and false positives (0.5 hours)

- Custom rules (1.5 hours)

  - Hands-on examples of different rule types applied to code that resembles real business logic:

    - Data flow sources and sinks [private data sent to a custom logger]

    - Data flow cleanse [cleanse: HTML encoding]

    - Semantic [use of a sensitive API, e.g. getEmployeeSSN()]

- Filtering (0.5 hours)

  - Prioritizing remediation efforts

    - Understanding the Ounce Vulnerability Matrix

    - Modifying finding severity/category

  - Isolating findings (using "bundles")

    - Input Validation

    - SQL Injection

    - Cross-Site Scripting

    - Etc.

- Reporting (0.5 hours)

  - Demonstrating compliance with industry regulations and best practices

    - OWASP Top 10

    - PCI
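Two ideas from the Session 4 agenda, semantic rules and isolating findings into bundles after adjusting severity, can be sketched together. This is a hypothetical illustration, not the Ounce Labs 6 rule or bundle format: a line-based regular expression stands in for matching on parsed method calls (so it would also match comments and string literals), and the rule list, category names, and severity levels are assumptions.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Finding:
    line: int
    category: str
    severity: str  # "high", "medium", or "low"


# Hypothetical semantic rules: flag any call to these APIs, whatever data flows into it.
SEMANTIC_RULES = [
    (re.compile(r"\bgetEmployeeSSN\s*\("), "Privacy Violation", "high"),
    (re.compile(r"\bprintStackTrace\s*\("), "Information Leakage", "low"),
]
SEVERITY_RANK = {"high": 0, "medium": 1, "low": 2}


def scan(source: str) -> List[Finding]:
    """Apply every semantic rule to every line of the source text."""
    findings = []
    for lineno, text in enumerate(source.splitlines(), start=1):
        for pattern, category, severity in SEMANTIC_RULES:
            if pattern.search(text):
                findings.append(Finding(lineno, category, severity))
    return findings


def bundle(findings: List[Finding], min_severity: str = "medium",
           overrides: Optional[Dict[str, str]] = None) -> Dict[str, List[int]]:
    """Group line numbers by category, keeping findings at or above min_severity.

    `overrides` re-rates whole categories, e.g. {"Information Leakage": "medium"}.
    """
    overrides = overrides or {}
    limit = SEVERITY_RANK[min_severity]
    bundles: Dict[str, List[int]] = defaultdict(list)
    for f in findings:
        severity = overrides.get(f.category, f.severity)
        if SEVERITY_RANK[severity] <= limit:
            bundles[f.category].append(f.line)
    return dict(bundles)


# Hypothetical Java fragment used as scan input.
JAVA_SNIPPET = """\
String ssn = emp.getEmployeeSSN();
try { audit(ssn); }
catch (Exception e) { e.printStackTrace(); }
"""

if __name__ == "__main__":
    found = scan(JAVA_SNIPPET)
    print(bundle(found))  # {'Privacy Violation': [1]}
    print(bundle(found, overrides={"Information Leakage": "medium"}))
    # {'Privacy Violation': [1], 'Information Leakage': [3]}
```

Raising the severity of a category is enough to pull its findings into the default view, which is the kind of re-prioritization the Filtering block of the agenda refers to.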

Session 5: Tool Adoption and Deployment, September 17th 2009

- Speaker: Shivang Trivedi

- Time: 2 hours

- Location: TBD

- Prerequisite: Attending Session 2 is preferred but not mandatory

- Classroom size: Open

Shivang will talk about integrating a static analysis tool into the SDLC.

Agenda (draft):

- Tool Selection

  - Flexibility with Static Analysis and/or Penetration Testing

  - Coverage

  - Enterprise Support

  - Quality of Security Findings

- Phases of Integration

  - Prerequisites

  - Goals and Challenges

  - Distribution of Roles and Responsibilities

  - Considering Level of Effort (LOE)

- Model Per Activity

  - Activity Flow

  - Phase Transition

- Deployment Model

  - Advantages

  - Disadvantages

- Free and Handy Tools to:

  - Continuously Integrate

  - Join the Activity Flow

- Improvements and Lessons Learned

  - Effective Use of the Tool’s Capabilities

  - Expanding Coverage

  - Analysis Techniques

  - Improving Results Accuracy
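For the "Free and Handy Tools to Continuously Integrate" item in the Session 5 agenda, a common pattern is a small build step that fails when a scan introduces new high-severity findings. This sketch assumes the scanner's results have already been exported to a JSON list of objects with `id`, `severity`, `category`, `file`, and `line` keys; that format, the script name, and the zero-tolerance default are assumptions, since neither vendor's actual export format is described here.

```python
import json
import sys
from typing import AbstractSet, Dict, List


def gate(findings: List[Dict], baseline: AbstractSet[str] = frozenset(),
         max_new_high: int = 0) -> int:
    """Return a process exit code: 1 if too many new high-severity findings, else 0.

    Findings whose id is in `baseline` were accepted earlier and do not fail the build.
    """
    new_high = [f for f in findings
                if f["severity"] == "high" and f["id"] not in baseline]
    for f in new_high:
        print(f"NEW HIGH {f['id']}: {f['category']} at {f['file']}:{f['line']}")
    return 1 if len(new_high) > max_new_high else 0


def load_ids(path: str) -> AbstractSet[str]:
    """Read a previously exported findings file and return its finding ids."""
    with open(path) as fh:
        return {f["id"] for f in json.load(fh)}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python scan_gate.py findings.json [baseline.json]
    with open(sys.argv[1]) as fh:
        results = json.load(fh)
    accepted = load_ids(sys.argv[2]) if len(sys.argv) > 2 else set()
    sys.exit(gate(results, accepted))
```

A CI server would run this right after the scan step, and a nonzero exit code fails the build. Seeding the baseline with the findings from the first full scan lets a team adopt the tool without fixing the whole backlog before the first passing build.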

Code Review and Static Analysis with tools

- What: Secure Code Review

- Who: Performed by Security Analysts

- Where it fits: BSIMM Secure Code Review

- Cost: Scales with the depth of review, the threats facing the application, and application size/complexity

This article will answer the following questions about secure code review and use of static analysis tools:

- What are static analysis tools and how do I use them?

- How do I select a static analysis tool?

- How do I customize a static analysis tool?

- How do I scale my assessment practices with secure code review?

Organizational

How do I scale my assessment practices with secure code review?

Implementing a static analysis tool can act as a force multiplier for an organization. The following presentation discusses a comprehensive set of steps organizations can take to adopt such tools successfully: who should adopt the tool, what steps they should take, whom they should involve, and how long it will take and how much it will cost.

Implementing a Static Analysis Tool.ppt

For those with existing assessment practices involving secure code review (whether or not those practices leverage tools), the question often becomes, "I can review an application, but how do I scale the practice to my entire organization without astronomical cost?" The following presentation addresses this question:

Maturing Assessment Through Static Analysis

Customization

People who believe that the value of static analysis tools lies predominantly in their core, out-of-the-box capabilities come up incredibly short. By customizing your chosen tool, you can expect:

- Dramatically better accuracy (increased true positives, decreased false positives, and decreased false negatives)

- Automated scanning for corporate security standards

- Automated scanning for an organization's top problems

- Visibility into adherence to (or inclusion of) sanctioned toolkits
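The accuracy gains listed above become measurable when the scanned code has a known set of vulnerabilities, for example a deliberately vulnerable application such as WebGoat. Assuming each finding can be reduced to a comparable (file, line, category) key, this minimal sketch counts true positives, false positives, and false negatives and derives precision and recall, so the same code can be scored before and after customization. The keys, the exact-match criterion, and the example data are hypothetical simplifications; real audits usually need fuzzier matching.

```python
from typing import AbstractSet, Dict, Hashable


def score(reported: AbstractSet[Hashable], known: AbstractSet[Hashable]) -> Dict[str, float]:
    """Compare reported findings with the vulnerabilities known to be present."""
    tp = len(reported & known)  # real issues the tool found
    fp = len(reported - known)  # reported issues that are not real
    fn = len(known - reported)  # real issues the tool missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "precision": precision, "recall": recall}


# Hypothetical ground truth and scan results, keyed by (file, line, category).
EXAMPLE_KNOWN = {
    ("Login.java", 42, "SQL Injection"),
    ("Search.java", 17, "Cross-Site Scripting"),
    ("Admin.java", 88, "Access Control"),
}
EXAMPLE_REPORTED = {
    ("Login.java", 42, "SQL Injection"),
    ("Util.java", 7, "SQL Injection"),
}

if __name__ == "__main__":
    print(score(EXAMPLE_REPORTED, EXAMPLE_KNOWN))
    # {'tp': 1, 'fp': 1, 'fn': 2, 'precision': 0.5, 'recall': 0.3333333333333333}
```

Customization that removes false positives raises precision, while new source, sink, and pass-through rules that catch missed issues raise recall.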

The following presentation, given at the NoVA chapter in 2006, discusses deployment and customization:

Warning: this presentation is old, and its examples use the now-defunct "CodeAssure" tool from what was then SecureSoftware.