This site is the archived OWASP Foundation Wiki.

Projects/OWASP Mobile Security Project -2015 Scratchpad

This is just a place to gather some ideas for the 2015 reworking of the Mobile Top Ten. It's totally unofficial: open musings about truth, beauty, and justice.

- 1 Proposed Timeline

- 2 What is It?

- 3 Who is it For?

- 4 Comments on Submitted Data

- 5 Other Questions

- 6 Conclusions Drawn From Data

- 7 OWASP Category Elements

- 8 "Test Drive" Category Element

- 9 Top Ten Scratchpad

- 9.1 Improper Platform Usage

- 9.2 Insecure Data

- 9.3 Insecure Communication (Owner: Andrew Blaich)

- 9.4 Insecure Authentication

- 9.5 Insufficient Cryptography

- 9.6 Insecure Authorization

- 9.7 Client Code Quality Issues

- 9.8 Code Tampering (Owner: Jonathan Carter)

- 9.9 Reverse Engineering (Owner: Jonathan Carter)

- 9.10 Extraneous Functionality (Owner: Andrew Blaich)

Proposed Timeline

The content of the Mobile Top 10 will be refreshed periodically, most probably at long intervals. Necessary changes will be made in between as appropriate, but major changes will happen only rarely between "official releases".

In order to keep interest in the project and to offer deeper and more complete content, there is a proposal to supply architectural and code samples that illustrate the Top 10 items.

As a first milestone, we suggest a refresh launch 6 months after the Top 10 is released, offering the initial version of these samples.

A fixed cadence for later refreshes/releases also needs to be established.

What is It?

This is the "Mobile Top Ten" what? It's the top 10 "stuff people tend to screw up", but here are some important questions.

- Business risk or technical risk? The business risk would be something like "intellectual property unprotected" or "customer data exposed." A technical risk would be something like "data stored in plain text files."

- Root cause, or final impact? Often root causes are things like not encrypting when we should. Final impact is stuff like unintended data leaks. The problem is that some of these overlap. Not every lack of crypto is a data leak, but many are.

- What threats are in scope? There are apps that simply do not care about protecting against malware, jailbreaking, etc. Think Yelp: it's just restaurant reviews. No financial impact, no reason to care about many client-side attacks. Plenty of apps do care about client-side attacks, e.g., banking, communications, and health data apps. Many items hinge on whether or not you care about client-side attacks. How do we capture this?

- If you care about client-side attacks, then failing to encrypt stuff is basically a data leakage.

- If you don't care about client-side attacks, then failing to encrypt stuff is kinda "gee you should do that".

- If you care about client-side attacks, there are probably some platform features that are not sufficient as-is: the app sandbox, etc. You probably want to add your own layer of encryption/protection on top.

- If you don't care about client-side attacks, then you simply need to be using the standard APIs (keychain, app data storage, etc.) in the standard supported ways.

Who is it For?

Do we intend this to be a tool that infosec / appsec people use? Do we intend lay people to make use of it? (e.g., developers and non-mobile IT security people) What does the target audience need to get from it?

(Paco's opinion) We need to have a narrative: If you found functionality that does X, it is probably in bucket A, unless it is also doing (or not doing) Y, in which case that's bucket B.

Comments on Submitted Data

General

(Jason) Looking at some data sets, it becomes clear that some consultancies follow a particular doctrine when doing mobile testing. Some did not contribute M1 data because they consider mobile security to be client-only. The same applied to M10. In addition, a couple of datasets used CWE IDs. These were harder to parse because generic CWEs do not specify whether the vuln is client-side or server-side (and in many of these cases it could be either). As Paco stated, code quality and source-level findings are hard to categorize as well.

BugCrowd

I see “storing passwords in the clear” as a very common finding in their data. It gets classified as M5 (poor authentication), M2 (insecure data storage), M4 (data leakage), and sometimes M6 (broken crypto).

I see “storing session tokens insecurely” as a common finding. It is getting classified as M9 (session handling) and M2 (insecure data storage). I wonder openly whether passwords and session tokens are really that different.

We see a lot of caching of non-password, non-session data. Some of it is done explicitly by the app, and some is done by the mobile OS through snapshotting, backups, etc. Sometimes it is classified as “data leakage” (M4) and sometimes as insecure storage (M2). Do we want the T10 to distinguish caching caused by the OS from caching caused by the app?

MetaIntelli

They report on only 18 distinct finding types, though they have 111,000 data points. Two of the 18 are double-counted: they appear to be categorised in both M3 and another category.

Other Questions

Communications issues come up a lot. But TLS and crypto are tightly coupled. “Communications issues” include missing certificate pinning, weak TLS ciphers, improper cert validation, HTTP and other plaintext protocols, and more. There’s a lot of overlap with “broken crypto”, like using Base64 instead of encryption, hard-coded keys/passwords, weak hash algorithms, and so on. How do we tease out “crypto” issues from “communications” issues from “insecure storage” issues?

I can imagine a heuristic like this:

- Did you use crypto where you were supposed to, but choose a crypto primitive that wasn’t appropriate for the task? That’s broken crypto.

- Did you omit crypto entirely when you should have used it? That’s insecure comms or insecure storage.
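The two questions above can be written down as a small decision function, which may help when triaging contributed data. This is a hypothetical sketch: the function name and its boolean inputs are assumptions made for illustration, not an agreed taxonomy.

```python
def classify_crypto_finding(crypto_needed: bool,
                            crypto_used: bool,
                            primitive_appropriate: bool,
                            data_in_transit: bool) -> str:
    """Hypothetical encoding of the triage heuristic above."""
    if not crypto_needed:
        # No sensitive asset to protect, so this is not a crypto-related finding.
        return "not a crypto finding"
    if not crypto_used:
        # Crypto was omitted entirely: file it by where the data was exposed.
        return "insecure comms" if data_in_transit else "insecure storage"
    if not primitive_appropriate:
        # Crypto was used, but the chosen primitive does not fit the task.
        return "broken crypto"
    return "no finding"


# Example: passwords hashed with unsalted MD5 before being written to disk.
print(classify_crypto_finding(True, True, False, False))  # broken crypto
```

Real findings will not always reduce to four booleans, so this is best read as a way to make the heuristic explicit rather than as a classifier.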

Some findings are deeply mobile (e.g., intent hijacking, keychain issues, jailbreak/root detection, etc.). They’re really tied to their respective platforms. Is that a problem for us? Does it matter?

Conclusions Drawn From Data

These are conclusions proposed from the 2014 data.

At Least One New Category Is Needed

(Paco) The "Other" category is not the least popular category. It's more popular, by an order of magnitude, than several others. This tells me that if we had a better category that captured "other" findings, it would be a benefit to the users of the top 10.

The Bottom 5 Categories Account for 25% or Less

The 5 least popular items are (where "1" is the least popular and "5" is the 5th least popular, or 6th most popular):

1. M8: Security Decisions Via Untrusted Inputs

2. M7: Client Side Injection

3. M9: Improper Session Handling

4. M6: Broken Cryptography

5. M1: Weak Server Side Controls

Combined with the fact that the 3rd or 4th most popular category is "Other", this suggests that 2 or 3 of these are, in fact, not in the "top ten". They may rank, for example, 11th and 12th, or even lower.

The Existing Buckets are Hard To Use

A few contributors tried to categorise their findings into the existing MT10. When they did, they showed symptoms of difficulty. The examples in the table below show MetaIntelli assigning single findings to two categories at once, and BugCrowd assigning the same kind of finding (credentials or session data stored insecurely) to 3 different categories. This suggests that the existing MT10 is not clear enough about where these issues belong.

| Description | Contributor | Categories |
|---|---|---|
| The app is not verifying hostname, certificate matching and validity when doing SSL secure connections. | MetaIntelli | M3 and M9 |
| Contains URLs with not valid SSL certificates and/or chain of trust | MetaIntelli | M3 and M5 |
| Authentication cookies stored in cleartext in sqlite database | BugCrowd | M9 - Improper Session Handling |
| Blackberry app stores credentials in plaintext | BugCrowd | M2 - Insecure Data Storage |
| Credentials and sensitive information not secured on Windows Phone app | BugCrowd | M5 - Poor Authorization and Authentication |

Some Topics that Show Up But Are Hard To Place

There are a few things that show up in the contributed data that do not have a good category to go into.

Code Level Findings

If someone is doing bad C coding (e.g., strcpy() and similar), there is no good bucket for that. Likewise, misusing the platform APIs (e.g., Android, iOS, etc.) is not well covered. It's hard to place violations of platform best practices (e.g., with intents and broadcasts and so on).

Many Android developers leave "printStackTrace" calls in their code, which is bad practice because the stack traces end up in the device log (logcat). Some APKs are even released in debug mode (android:debuggable="true").
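Whether an APK ships in debug mode can be checked from its manifest. A minimal sketch, assuming the manifest has already been decoded to plain-text XML (the copy inside an APK is binary XML, so it needs a decoder such as apktool first); the helper name `is_debuggable` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace URI behind the "android:" prefix in AndroidManifest.xml.
ANDROID_NS = "{http://schemas.android.com/apk/res/android}"


def is_debuggable(manifest_xml: str) -> bool:
    """Return True if <application> explicitly sets android:debuggable="true"."""
    root = ET.fromstring(manifest_xml)
    app = root.find("application")
    if app is None:
        return False
    # ElementTree stores namespaced attributes under "{uri}localname" keys.
    return app.get(ANDROID_NS + "debuggable", "false").lower() == "true"


SAMPLE = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.app">
  <application android:label="Demo" android:debuggable="true"/>
</manifest>"""

print(is_debuggable(SAMPLE))  # True
```

Because the build system typically injects this flag for debug build types, checking the decoded manifest of the APK that actually ships is more reliable than checking the source manifest.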

OWASP Category Elements

This section explores the critical elements that must be included within all OWASP Mobile Top Ten 2015 categories. Each element is listed below along with a brief description of the appropriate content. The goal is to "test drive" this list of required elements with a sample, non-committal category to verify that the elements adequately cover what is needed within each of the OWASP Mobile Top Ten 2015 categories. These elements were initially based on the Google Hangout meeting held on April 1, 2015.

Label (generic and audience-specific labels)

This element is a unique identifier for the bucket of issues that belong together. In the OWASP Mobile Top Ten 2015 Survey, we found that there were many different audiences that may use the OWASP Mobile Top Ten 2015 for different purposes. As always, there needs to be a generic label that uniquely identifies each bucket of issues. However, there should also be additional labels for the same category that are audience-specific to make it easier for different audiences to identify the category they are looking for. These additional labels will help clarify and differentiate the categories.

Overview Text

This element is a generic education piece (100-200 words) around the nature of the category. It should describe the nature of the category as it relates to the different audiences (e.g., penetration testers; software engineers). It should include external references and educational links to other sources around the nature of the problem.

Prominent Characteristics

This element eliminates any uncertainty or ambiguity that may result from vague or overly broad category labels. It should include "headline vulnerabilities" that made it into the media and help each audience get a "gut feel" for what belongs in this category. In the 2014 list, we found many instances where the same vulnerabilities were spread across different categories. Hence the need for this additional element, along with a push towards better categorisation.

Risk

This element helps clarify how critical the category of issues is from both a business and a technical perspective. During the meeting of April 1, 2015, it was proposed that the CVSS scoring scheme be used here to help guide the audiences in prioritising fixes.

Examples

This element gives coding-specific examples, CVE vulnerabilities and newsworthy events that fall into this category. It will help clarify to the different audiences what fits into this category.

Audience Specific Guidance

This element gives practical advice and guidance for category remediation that is relevant to each audience (100-200 words). Each audience may approach the issue of remediation from very different perspectives. For example, an auditor may simply want to know more about whether or not remediation is appropriate. Meanwhile, a software engineer may want to have coding-specific advice. Each audience's concerns must be addressed in this element.

"Test Drive" Category Element

Here, we are testing a particular category to see whether or not the proposed elements for each category are adequate to address each audience's needs. It is important to note that this is a sample category and is not a formal commitment to any final category in the OWASP Mobile Top Ten 2015. It is strictly meant for testing purposes. Members who would like to 'own' particular elements in the category below should 'sign up' and add content where appropriate.

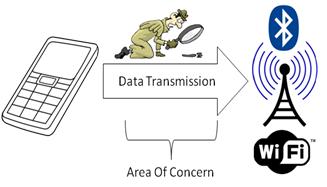

Insecure Data Transmission

Generic Label

Owner(s): Jonathan Carter

Audience-Specific Labels

Owner(s): Jonathan Carter

Overview Text

Mobile devices and applications can be used to transmit data over many communication channels. These channels include, but are not limited to: wireless cellular protocols (2G, 3G, 4G, 5G, etc.), WiFi (ad-hoc, infrastructure, and peer-to-peer modes), Bluetooth (and its variants), infrared, RFID, and NFC.

Vulnerabilities in this category focus on weaknesses in protecting "data in motion" when it is transmitted over the communication channels noted above. They can arise from one or more failures to adequately secure a channel, including but not limited to: plain-text transmission of sensitive data (i.e., not using adequate encryption over the channel); insufficient authentication of encrypted channel endpoints (i.e., failing to confirm the identity of the remote endpoint you are attempting to communicate securely with); and/or inadequately establishing the encrypted communication protocol (i.e., using weak encryption secrets, ciphers, and/or protocols).
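The first failure mode, plain-text transmission, often shows up as hard-coded cleartext endpoints in an app's code or resources. A rough static check is sketched below; the scheme list and regular expression are assumptions, and a string scan complements rather than replaces inspecting live traffic.

```python
import re

# Plaintext schemes to flag (illustrative, not exhaustive). "https://" and
# "wss://" do not match because the scheme must be followed directly by "://".
CLEARTEXT_URL = re.compile(r"\b(?:http|ws|ftp)://[^\s\"'<>]+", re.IGNORECASE)


def find_cleartext_urls(text: str) -> list[str]:
    """Return every URL in `text` that uses a plaintext scheme."""
    return CLEARTEXT_URL.findall(text)


source = 'LOGIN = "http://api.example.com/login"; CDN = "https://cdn.example.com"'
print(find_cleartext_urls(source))  # ['http://api.example.com/login']
```

Expect false positives such as XML namespace URIs (e.g. http://schemas.android.com/apk/res/android in every Android manifest and layout), which are identifiers rather than network endpoints.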

Owner(s): Milan Singh Thakur, Amin Lalji

Prominent Characteristics

Owner(s): David Fern

The key differentiator of this vulnerability is that it concerns unencrypted or improperly encrypted data being stolen during transmission (over the airwaves), not from the device itself.

Insecure Data Transmission should not be confused with others in the top 10 such as:

M3: Insufficient Transport Layer Protection – I DO NOT SEE ANY DIFFERENCE

M4: Unintended Data Leakage – Unintended data leakage can be a result of insecure data transmission. Once data has been intercepted and interpreted, it may contain information that is valuable to attackers (i.e., it has leaked).

M6: Broken Cryptography – While broken cryptography does relate to data that has been improperly encrypted, and it may be an input to, or a cause of, insecure data transmission, it focuses on the encryption process/technique itself on the device transmitting the data.

Risk

The risk attributed to mobile applications that suffer from this category of vulnerabilities depends on the "business" value of the data being transmitted. From a technical perspective, however, exploitation of this vulnerability can lead to a loss of confidentiality (i.e., access to confidential/sensitive data by unauthorized individuals/systems) and possibly a loss of integrity (i.e., data may be changed in transit).

The ease of exploiting this type of vulnerability varies with the communication channel, but generally speaking, it is not an overly complex attack to execute (e.g., when sensitive data is transmitted over WiFi in clear text, it is trivial to intercept and review).

Mitigating the risks associated with this category of vulnerabilities can take various forms depending on the transmission protocol being used.

- Include examples here of protocol-level security implemented at Layer 6/7 (TLS/HTTPS), WiFi, Bluetooth, NFC, RFID, etc.
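For the TLS/HTTPS case, the channel failures described in the overview (unauthenticated endpoints and weak protocols) map to a handful of client settings. A minimal sketch using Python's standard `ssl` module as a stand-in for the platform TLS stack; on Android or iOS the equivalent controls live in the platform networking APIs (e.g. Network Security Config, App Transport Security). Treating TLS 1.2 as the minimum is an assumed policy, not one stated on this page, and the function names are hypothetical.

```python
import socket
import ssl


def make_strict_client_context() -> ssl.SSLContext:
    """TLS client context that authenticates the server and refuses legacy protocols."""
    # create_default_context() loads the system trust store, sets
    # verify_mode = CERT_REQUIRED and enables hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse everything below TLS 1.2 (SSLv2/SSLv3, TLS 1.0/1.1); assumed floor.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def negotiated_parameters(host: str, port: int = 443) -> tuple:
    """Connect and report the protocol version and cipher suite actually negotiated."""
    ctx = make_strict_client_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        # server_hostname enables SNI and is the name checked against the certificate.
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version(), tls.cipher()[0]
```

If the server presents an untrusted or mismatched certificate, or only offers legacy protocols, the handshake raises `ssl.SSLError` (for certificate problems, the subclass `ssl.SSLCertVerificationError`) instead of silently falling back.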

Owner(s): Rajvinder Singh, Milan Singh Thakur, Amin Lalji

Examples

Owner(s): Adam Kliarsky

Audience-Specific Guidance

Owner(s): Andi Pannell, Amin Lalji

From both a development and an auditing standpoint, the easiest way to test this is to insert a proxy (such as Burp) between the device running the mobile app and the WiFi connection. Look for data transferred in plain text, and identify weak protocols (SSLv2, SSLv3) and weak cipher suites (e.g., those using RC4 encryption or MD5-based MACs) used to transmit data.
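Once the proxy or a TLS client reports the negotiated protocol and cipher suite, the check described above reduces to a lookup against a denylist. A minimal sketch; the denylists contain only the examples named in this paragraph and are assumptions, not a complete policy. Suite names may appear in IANA form (`TLS_RSA_WITH_RC4_128_MD5`) or OpenSSL form (`RC4-MD5`), so the check matches substrings.

```python
# Denylists seeded only with the examples above; a real audit needs a maintained policy.
WEAK_PROTOCOLS = {"SSLv2", "SSLv3"}
WEAK_SUITE_MARKERS = ("RC4", "MD5")


def weak_tls_parameters(protocol: str, cipher_suite: str) -> list[str]:
    """Return the reasons a negotiated protocol/cipher-suite pair looks weak."""
    reasons = []
    if protocol in WEAK_PROTOCOLS:
        reasons.append(f"weak protocol {protocol}")
    suite = cipher_suite.upper()
    for marker in WEAK_SUITE_MARKERS:
        if marker in suite:
            reasons.append(f"cipher suite uses {marker}")
    return reasons


print(weak_tls_parameters("SSLv3", "RC4-MD5"))
# ['weak protocol SSLv3', 'cipher suite uses RC4', 'cipher suite uses MD5']
print(weak_tls_parameters("TLSv1.2", "ECDHE-RSA-AES128-GCM-SHA256"))  # []
```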

Top Ten Scratchpad

These are proposed new categories for the 2015 Mobile Top Ten. Edit them. Discuss them. Include inline comments with attribution where possible.

Improper Platform Usage

Generic Label

Audience-Specific Label

Overview Text

This category would cover misuse or failure to use platform security controls. It might include Android intents, platform permissions, misuse of TouchID, the Keychain, or some other security control that is part of the mobile operating system.
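As one Android-specific illustration of platform misuse, components exported to other apps without a guarding permission can be flagged from the decoded manifest. A simplified sketch with a hypothetical function name: it only looks at an explicit `android:exported="true"` and ignores the older implicit-export rule for components with intent filters, so its output is a list of candidates for review rather than a verdict.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "{http://schemas.android.com/apk/res/android}"
COMPONENT_TAGS = ("activity", "service", "receiver", "provider")


def exported_without_permission(manifest_xml: str) -> list[str]:
    """List components explicitly exported to other apps with no android:permission set."""
    root = ET.fromstring(manifest_xml)
    app = root.find("application")
    if app is None:
        return []
    findings = []
    for tag in COMPONENT_TAGS:
        for comp in app.findall(tag):
            exported = comp.get(ANDROID_NS + "exported") == "true"
            if exported and comp.get(ANDROID_NS + "permission") is None:
                findings.append(f"{tag} {comp.get(ANDROID_NS + 'name')}")
    return findings


SAMPLE = """<manifest xmlns:android="http://schemas.android.com/apk/res/android" package="com.example">
  <application>
    <activity android:name=".MainActivity" android:exported="true"/>
    <service android:name=".SyncService" android:exported="true"
             android:permission="com.example.permission.SYNC"/>
    <receiver android:name=".PrivateReceiver" android:exported="false"/>
  </application>
</manifest>"""

print(exported_without_permission(SAMPLE))  # ['activity .MainActivity']
```

A launcher activity has to be exported, so a hit like the one above may be intended; the point is that every exported entry point gets a deliberate decision.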

Prominent Characteristics

Risk

Examples

Insecure Data

Generic Label

Audience-Specific Label

Overview Text

This new category will be a combination of M2 + M4 from Mobile Top Ten 2014.

Prominent Characteristics

Risk

Examples

Insecure Communication (Owner: Andrew Blaich)

Generic Label

Audience-Specific Label

Overview Text

This covers poor handshaking, incorrect SSL versions, weak negotiation, cleartext communication of sensitive assets, etc.

Prominent Characteristics

Risk

Examples

Insecure Authentication

Generic Label

Audience-Specific Label

Overview Text

Broken notions of authenticating the end user or bad session management.
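On the session-management side, the usual remedies are unguessable tokens plus expiry and revocation enforced by the server. A minimal in-memory sketch under those assumptions; the class name, the 15-minute lifetime and the explicit `now` parameter (there only to make it testable) are illustrative choices, and a real backend would persist sessions and rely on its framework's session support.

```python
import secrets
import time
from typing import Dict, Optional, Tuple

DEFAULT_TTL_SECONDS = 15 * 60  # assumed session lifetime; choose per app risk


class SessionStore:
    """Minimal in-memory, server-side session store (illustrative only)."""

    def __init__(self, ttl: float = DEFAULT_TTL_SECONDS) -> None:
        self.ttl = ttl
        # token -> (user_id, expiry timestamp)
        self._sessions: Dict[str, Tuple[str, float]] = {}

    @staticmethod
    def _now(now: Optional[float]) -> float:
        return time.time() if now is None else now

    def create(self, user_id: str, now: Optional[float] = None) -> str:
        # 32 bytes from the OS CSPRNG, URL-safe Base64 encoded (43 characters).
        token = secrets.token_urlsafe(32)
        self._sessions[token] = (user_id, self._now(now) + self.ttl)
        return token

    def validate(self, token: str, now: Optional[float] = None) -> Optional[str]:
        """Return the user id for a live session, or None if unknown or expired."""
        entry = self._sessions.get(token)
        if entry is None:
            return None
        user_id, expires_at = entry
        if self._now(now) >= expires_at:
            del self._sessions[token]  # expire on the server, not just on the client
            return None
        return user_id

    def revoke(self, token: str) -> None:
        """Server-side logout: the token stops working even if the client keeps it."""
        self._sessions.pop(token, None)
```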

Prominent Characteristics

Risk

Examples

Insufficient Cryptography

Generic Label

Audience-Specific Label

Overview Text

The code applies cryptography to a sensitive information asset. However, the cryptographic algorithm is flawed in some way.
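A common instance is protecting stored passwords with a fast, unsalted hash such as MD5. As a swapped-in illustration (not something this page prescribes), the sketch below contrasts that with salted PBKDF2-HMAC-SHA256 from Python's `hashlib`; the iteration count is an assumed work factor to tune per platform, and the function names are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 600_000  # assumed work factor; tune for the target hardware


def weak_hash(password: str) -> str:
    # Anti-pattern shown for contrast: fast and unsalted, so identical passwords
    # produce identical hashes and offline guessing is cheap.
    return hashlib.md5(password.encode("utf-8")).hexdigest()


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key) using salted PBKDF2-HMAC-SHA256."""
    salt = os.urandom(16) if salt is None else salt
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    _, key = hash_password(password, salt)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(key, expected)
```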

Prominent Characteristics

Risk

Examples

Insecure Authorization

Generic Label

Audience-Specific Label

Overview Text

This used to be "Client Side Injection", one of our lesser-used categories. This is a category to capture any failures in authorization (e.g., authorization decisions in the client side, forced browsing, etc.). It is distinct from authentication issues (e.g., device enrolment, user identification, etc.).
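The core remedy for client-side authorization decisions is to repeat every check on the server, keyed to the authenticated identity rather than anything the client asserts. A minimal sketch with hypothetical names and an in-memory stand-in for the data store; requesting another user's ID directly (forced browsing) fails because ownership is checked on every request.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    owner_id: str


class AuthorizationError(Exception):
    """Raised when the authenticated user may not access the resource."""


# Hypothetical server-side records; a real backend would query its database.
DOCUMENTS = {
    "d1": Document("d1", owner_id="alice"),
    "d2": Document("d2", owner_id="bob"),
}


def get_document(authenticated_user: str, doc_id: str) -> Document:
    """Authorize on the server; ignore client-side flags such as a hidden 'admin' menu."""
    doc = DOCUMENTS.get(doc_id)
    if doc is None or doc.owner_id != authenticated_user:
        # One error for "missing" and "not yours", so valid IDs cannot be probed.
        raise AuthorizationError("not found or not permitted")
    return doc
```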

Prominent Characteristics

Risk

Examples

Client Code Quality Issues

Generic Label

Audience-Specific Label

Overview Text

This was "Security Decisions Via Untrusted Inputs", one of our lesser-used categories. This would be the catch-all for code-level implementation problems in the mobile client. That's distinct from server-side coding mistakes. This would capture things like buffer overflows, format string vulnerabilities, and various other code-level mistakes where the solution is to rewrite some code that's running on the mobile device.

Prominent Characteristics

Risk

Examples

Code Tampering (Owner: Jonathan Carter)

Generic Label

Audience-Specific Label

Overview Text

This category covers binary patching, local resource modification, method hooking, method swizzling, and dynamic memory modification.
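One common countermeasure against local resource modification is to compare a resource's digest with a known-good value recorded at build time. A minimal sketch with hypothetical names; an attacker who can patch the binary can usually patch out this check or the expected digest too, so it raises the cost of tampering rather than preventing it.

```python
import hashlib
import hmac


def sha256_of(path: str) -> str:
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(64 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def resource_is_untampered(path: str, expected_sha256: str) -> bool:
    """True if the file's digest matches the value recorded at build time."""
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())
```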

Prominent Characteristics

Risk

Examples

Reverse Engineering (Owner: Jonathan Carter)

Generic Label

Audience-Specific Label

Overview Text

This category includes analysis of the final core binary to determine its source code, libraries, algorithms, and other assets. Think of IDA Pro, Hopper, otool, and other binary inspection tools used to reverse engineer the application. The information recovered this way is valuable to a hacker on its own.
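Even without a disassembler, a lot can be recovered from the printable strings in a binary, which is how hard-coded endpoints and keys are often found. A minimal sketch of what the Unix `strings` tool does; the function name and the 6-character minimum are assumptions.

```python
import re


def extract_strings(binary: bytes, min_len: int = 6) -> list[str]:
    """Return runs of at least `min_len` printable ASCII bytes, like `strings`."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [run.decode("ascii") for run in pattern.findall(binary)]


blob = b"\x00\x01api_key=EXAMPLEKEY\x00\xffhttps://internal.example.com/debug\x00ok\x00"
print(extract_strings(blob))
# ['api_key=EXAMPLEKEY', 'https://internal.example.com/debug']
```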

Prominent Characteristics

Risk

Examples

Extraneous Functionality (Owner: Andrew Blaich)

Generic Label

Audience-Specific Label

Overview Text

Often, developers include hidden backdoor functionality or other internal development security controls that are not intended to be released into a production environment. For example, a developer may accidentally include a password as a comment in a hybrid app. Another example is a switch that disables 2-factor authentication during testing.
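Leftovers like a commented-out password can be caught before release by scanning a hybrid app's HTML and JavaScript comments for suspicious keywords. A rough sketch: the regular expressions, keyword list and function name are assumptions, it is not a real HTML/JavaScript parser, and it will miss secrets that do not sit in comments.

```python
import re

# HTML <!-- --> comments, JS /* */ comments, and JS // comments. The lookbehind
# skips the "//" in URLs such as "https://". Simplified: not a real parser.
COMMENT = re.compile(r"<!--.*?-->|/\*.*?\*/|(?<![:\w])//[^\n]*", re.DOTALL)
SUSPICIOUS = re.compile(r"passw(?:or)?d|secret|api[_-]?key|token|backdoor|debug",
                        re.IGNORECASE)


def suspicious_comments(source: str) -> list[str]:
    """Return comments that mention credentials or debug/backdoor behaviour."""
    return [m.group(0).strip() for m in COMMENT.finditer(source)
            if SUSPICIOUS.search(m.group(0))]


PAGE = """<html><!-- TODO remove: admin password is hunter2 -->
<script>
  var url = "https://example.com/token/refresh";
  // debug backdoor: skip 2FA when ?qa=1
  /* layout tweak */
</script></html>"""

print(suspicious_comments(PAGE))
# ['<!-- TODO remove: admin password is hunter2 -->', '// debug backdoor: skip 2FA when ?qa=1']
```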

Prominent Characteristics

Risk

Examples

(Jason) Regarding M10: several submissions reported M10 vulns. Unfortunately, some came from services such as binary reputation scanners, which cannot check for dynamic or code-level findings. To fix this, I recommend renaming or reworking this category. I want to separate anti-exploit from code obfuscation/anti-reversing. Must talk to the group about this.